What are LLM hallucinations?

LLM hallucinations occur when an AI agent produces responses that are wrong, irrelevant, or contradictory to the context or known facts. While these mistakes may appear harmless, they can cause misinformation, miscommunication, or frustration—especially in critical use cases.

Examples of scenarios where hallucinations can have significant consequences:

Case 1: Banking AI Agent

- Scenario: A customer asks, “What is my loan eligibility?”

- Hallucination: The AI agent confidently responds, “You’re pre-approved for $50,000!” without checking the user’s financial profile.

- Reality: The customer applies, only to find they are ineligible.

- Impact: Trust in the bank’s AI system goes haywire, affecting customer loyalty negatively.

Case 2: Lead Generation AI Agent

- Scenario: A potential customer asks, “Does your platform integrate with Salesforce?”

- Hallucination: The AI agent tells them, “Yes, we have complete Salesforce integration,” when this is not true.

- Reality: The customer signs up only to learn the integration does not exist.

- Impact: The company loses credibility, possibly causing customers to leave bad reviews or churn.

Case 3: Travel AI Agent

- Scenario: A traveler asks, “Do I need a visa to visit Japan?”

- Hallucination: The AI agent confidently says, “No visa is required for any nationality,” whereas some countries have special requirements to enter Japan.

- Reality: The traveler gets denied boarding at the airport.

- Impact: The customer’s travel plans are disrupted, leading to frustration and potential financial loss.

Case 4: E-commerce AI Agent

- Scenario: A shopper asks, “Is this product available in blue?”

- Hallucination: The AI agent assures them, “Yes, it’s in stock,” when it isn’t.

- Reality: The shopper goes through checkout only to find the item unavailable.

- Impact: The customer abandons the cart and loses trust in the platform.

Why do LLMs hallucinate?

Hallucinations in LLMs occur due to a set of factors that determine the response given by these models. Being aware of the causes will definitely help in enhancing the reliability and accuracy of AI outputs. Here is a detailed factor contributing to hallucinations.

1. Unclear or too broad statements

Ambiguous or nonspecific instructions result in the AI making broad assumptions or guesses. In the absence of clarity within the prompt, the model responds based on probability rather than specific information provided. Results include completely incorrect or irrelevant outputs.

2. Lack of domain knowledge

While LLMs are trained on a wide variety of general data, they do lack depth for more specialized fields. In cases where the model is being asked to provide insights into niche or technical subjects, superficial or even completely wrong information may be produced because of its insufficiency of expertise in that domain.

3. Lack of training data

Models learn from large datasets, but if these training data are incomplete or not well-covered in some areas, AI could struggle with topics that are not covered. Gaps in knowledge can indeed lead to hallucinations, where the AI either fabricates or misses critical details.

4. Uncertainty in language

LLMs represent probabilistic models of text creation, working out the pattern from huge datasets. In cases of uncertainty, the AI would provide responses that would make sense according to the statistical likelihood but may be misleading or factually incorrect.

5. Divergence in source reference

With LLMs, most of the content is generated from external sources or references. If the model draws from conflicting or incorrect sources, then it may elicit information in such a manner as to create contradictions or misrepresentations of the original data.

6. Jailbreak prompts for exploitation

Some kinds of prompts can tend to fool AI into getting around its safety filters and eventually come up with harmful, biased, or inappropriate content. The design of these kinds of prompts is therefore to trick the model into producing undesired outputs.

7. Reliance on incomplete or contradictory datasets

It can also reproduce inconsistent information if such training data is contradictory, incomplete, or inconsistent. The AI might give fragmented answers, misleading statements, or even contradictory responses.

8. Overfitting and Lack of Novelty

When a model overfits, it becomes too strongly attached to the specific patterns it learned during training and cannot generalize to new or unfamiliar inputs. Consequently, the AI ends up regurgitating familiar but possibly outdated or irrelevant information when presented with new questions.

9. Guesswork from vague prompts

If a prompt is too ambiguous or lacking in context, the AI will make speculative leaps to fill in the gaps. This then leads to responses which may sound plausible but have no basis in fact and sometimes are not relevant to the original query.

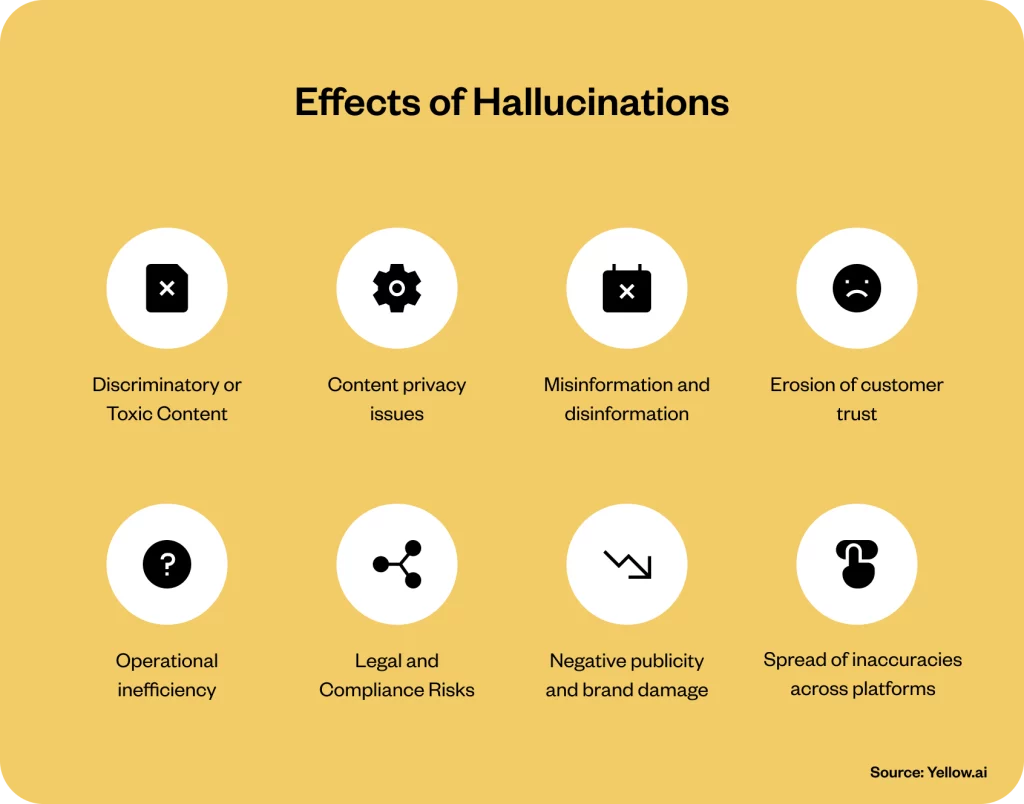

Effects of Hallucinations

1. Discriminatory or Toxic Content

In some cases, this hallucination may lead the LLM to generate biased, offensive, or even harmful content. This can have wide-reaching effects on reputation damage, especially in sensitive use cases like hiring or customer service.

2. Content privacy issues

Hallucinations can result in an AI generating sensitive or confidential information inadvertently, posing serious risks for data privacy and compliance. This is a particular concern in areas where models work with personal or proprietary information.

3. Misinformation and disinformation

One of the major risks of hallucinations involves the proliferation of incorrect or misleading information. Misinformation can mislead users, leading to poor decision-making or even harm, in industries such as healthcare, financial services, and education.

4. Erosion of customer trust

The more users experience hallucinations, the less they will trust the AI system. This will lead to disengagement from and a lack of adoption of AI-powered tools, particularly those with customer-facing interactions.

5. Operational inefficiency

Hallucinations create the need for constant corrections and clarifications, which make a waste of time and resources. This may affect general productivity and rise in operational costs if integrated into critical business workflows.

6. Legal and Compliance Risks

For regulated industries, generating content that is wrong or inappropriate may involve legal consequences. Missteps around hallucinations can result in compliance violations that might further lead to fines, lawsuits, and/or other legal consequences.

7. Negative publicity and brand damage

If hallucinations become public or are repeatedly experienced by users, they can lead to negative publicity and a damaged brand image. This kind of damage can be hard to reverse and may have enduring consequences for a company’s reputation.

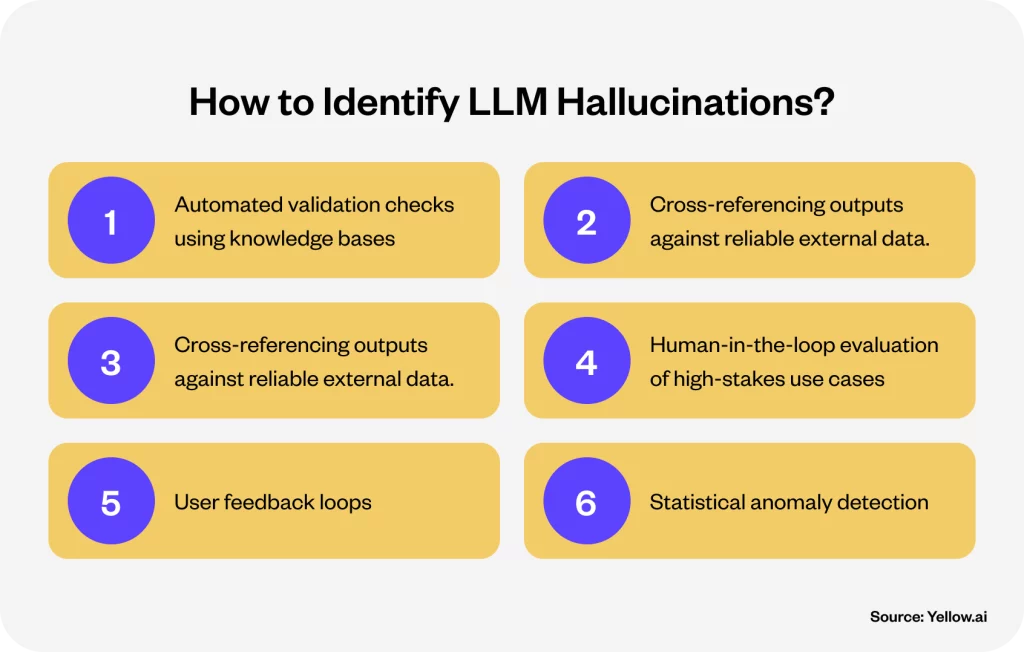

How to identify LLM hallucinations?

Detection of LLM hallucinations, in general, is vital to retain the accuracy, relevance, and reliability of AI responses. It will involve a mix of automated validation tools, cross-referencing from independent sources, and human moderation. Following is a description of major ways hallucinations in LLMs can be detected, with examples added to provide clarity to understand them better:

1. Automated validation checks using knowledge bases

Automated validation checks ensure that LLMs’ responses are aligned with trusted, pre-set knowledge bases, serving as benchmarks for factual correctness. This is often made from curated sources that are accurate and verified. When the AI generates a response, it cross-checks against these sources. If the generated output conflicts with the data set in the knowledge base, such a response is flagged as potentially erroneous.

For instance, if an AI gives a financial figure for a company’s revenue that does not align with the most up-to-date, verified figure from a real-time database, such a response is flagged as wrong. Self-editing occurs automatically, or a review is sought from an expert:

2. Cross-referencing outputs against reliable external data.

These LLMs may connect to an external source such as APIs, databases, or real-time data streams. Cross-referencing is a process where the AI would support its output using updated information from reliable sources. This would mean that by referring to such external references, the model can verify the accuracy of its response before it produces that response to the user.

For example, a customer support AI may go ahead and provide outdated information about a product return policy. It automatically checks against the current policy through an updated product database or API. If there is any mismatch, the model will either correct the information on its own or display a message indicating that it is retrieving more accurate information.

3. Human-in-the-loop evaluation of high-stakes use cases

The application will be where accuracy in the responses is critical, like in health, finance, or legal areas. For human-in-the-loop evaluation, the AI-generated responses are reviewed by human experts, especially when the model is uncertain or involves high-risk topics in the query. This assures that the AI’s output follows domain-specific knowledge, legal regulations, or ethical standards.

For instance, in the medical domain, an AI might propose a symptom-based diagnosis for a patient. Before the actual relaying of such a suggestion to a patient or its caregiver, a healthcare expert reviews the response for confirmation that the diagnosis is appropriate and supported by medical standards.

4. Checks for consistency within the conversation itself

LLMs can be designed to be consistent in a conversation through ongoing assessment of new responses against prior ones. If, within an ongoing conversation, a model presents information that is conflictive or inconsistent with respect to previous information provided, it could easily be flagged as a hallucination. Consistency checks ensure the AI stays logically coherent and contextually appropriate.

For example, if a user asks an AI what the hours are of a store and later in the conversation asks the question again, the model should respond with the same answer unless the hours have changed. If the AI provides different store hours in successive responses, that might be a hallucination or other error in the model’s reasoning and would be flagged for review.

5. Statistical anomaly detection

Statistical anomaly detection uses machine learning algorithms to monitor patterns in the model’s responses. The AI generates responses based on statistical probabilities, and when an output deviates significantly from normal conversational patterns or expected results, it can be flagged as an anomaly. These systems track syntax, sentiment, and factual consistency to identify any outputs that seem out of place or illogical.

For example, an AI engineered for customer support, while supposed to be answering product-related questions, suddenly begins to talk about irrelevant topics such as weather patterns or historical trivia. This statistical anomaly is then flagged for review. These inconsistencies often indicate the occurrence of hallucinations where the model drifts off the topic.

6. User feedback loops

User feedback is crucial in detecting and correcting hallucinations. Users, while interacting with the AI and noticing inaccuracies or problem responses, can flag those. This feedback becomes invaluable to the training of the AI to avoid similar mistakes in the future. In time, patterns within feedback may indicate problems in the model’s performance that are systematic, hence forming the bedrock for refinement.

For instance, if the AI keeps giving incorrect details about product availability according to users, then this information is gathered and collated. This data will then retrain the model and help in fine-tuning future responses to reduce the occurrence of such hallucinations.

Strategies that Yellow.ai uses to minimize hallucinations

At Yellow.ai, we prioritize delivering accurate, reliable, and contextually relevant interactions. Our cutting-edge strategies are designed to mitigate hallucinations—instances where AI generates incorrect or irrelevant responses—ensuring seamless user experiences. Here’s how we do it:

1. Robust architecture with multistep evaluation

Our AI is built on a robust, multistep evaluation framework that carefully examines user inputs and the relevance of AI responses. Each response undergoes rigorous scrutiny to ensure it aligns with the user query before being sent. This minimizes errors and maintains the conversational flow.

2. Pivotal customer control with intelligent fallback handling

Yellow.ai agents are designed to gracefully handle ambiguous or irrelevant queries by:

- Executing fallback flows that guide users back on track with meaningful suggestions.

- Transferring conversations to human agents seamlessly when complexity requires expert intervention.

This dynamic adaptability ensures users always receive the support they need without confusion.

3. Bulk testing with predefined conversations

Before an AI agent goes live, it undergoes extensive bulk testing with predefined scenarios that simulate real-world queries. This proactive approach allows us to identify and address potential hallucination risks, ensuring a polished, reliable experience from day one.

4. Optimized prompting techniques

We leverage advanced prompting methods, such as:

- One-shot prompting for clear instructions that guide the AI in generating precise responses.

- Few-shot prompting to provide context-rich examples, enhancing the AI’s understanding of nuanced queries.

These techniques fine-tune the AI agent’s behavior for higher accuracy and contextual relevance.

5. Retrieval-augmented generation (RAG)

Yellow.ai integrates Retrieval-Augmented Generation, a cutting-edge method where the AI pulls information from a curated knowledge base in real-time. This ensures responses are grounded in accurate, up-to-date data, effectively eliminating the risk of hallucinations.

6. Domain-specific fine-tuning

Our AI agents are fine-tuned with domain-specific data to ensure they excel in understanding and responding to industry-specific terminology, scenarios, and customer needs. Whether it’s healthcare, finance, or retail, Yellow.ai agents deliver tailored, precise interactions every time.

Conclusion

Yellow.ai’s commitment to minimizing hallucinations enhances the trustworthiness of our AI-powered solutions. With advanced strategies like RAG and fine-tuning, our models outperform competitors by ensuring higher accuracy and relevance. Lower hallucination rates mean better user experiences, improved brand trust, and measurable operational benefits for businesses.