As businesses increasingly rely on AI-powered agents for customer support, traditional performance metrics are proving inadequate. These agents handle a diverse range of queries, and the way we measure their effectiveness must evolve. Let’s explore the key metrics businesses should track and how Yellow.ai’s Analyze module can help.

Why New Metrics Are Needed

AI agents are fundamentally different from human agents. While humans bring empathy and nuanced understanding, AI agents excel at scalability, speed, and consistency. To truly assess their performance, we need metrics that capture:

- The AI agent’s understanding of user queries

- Response relevance

- How sentiment affects customer satisfaction

- Opportunities for continuous improvement

Tracking these dimensions ensures AI-driven support aligns with user expectations and delivers measurable value.

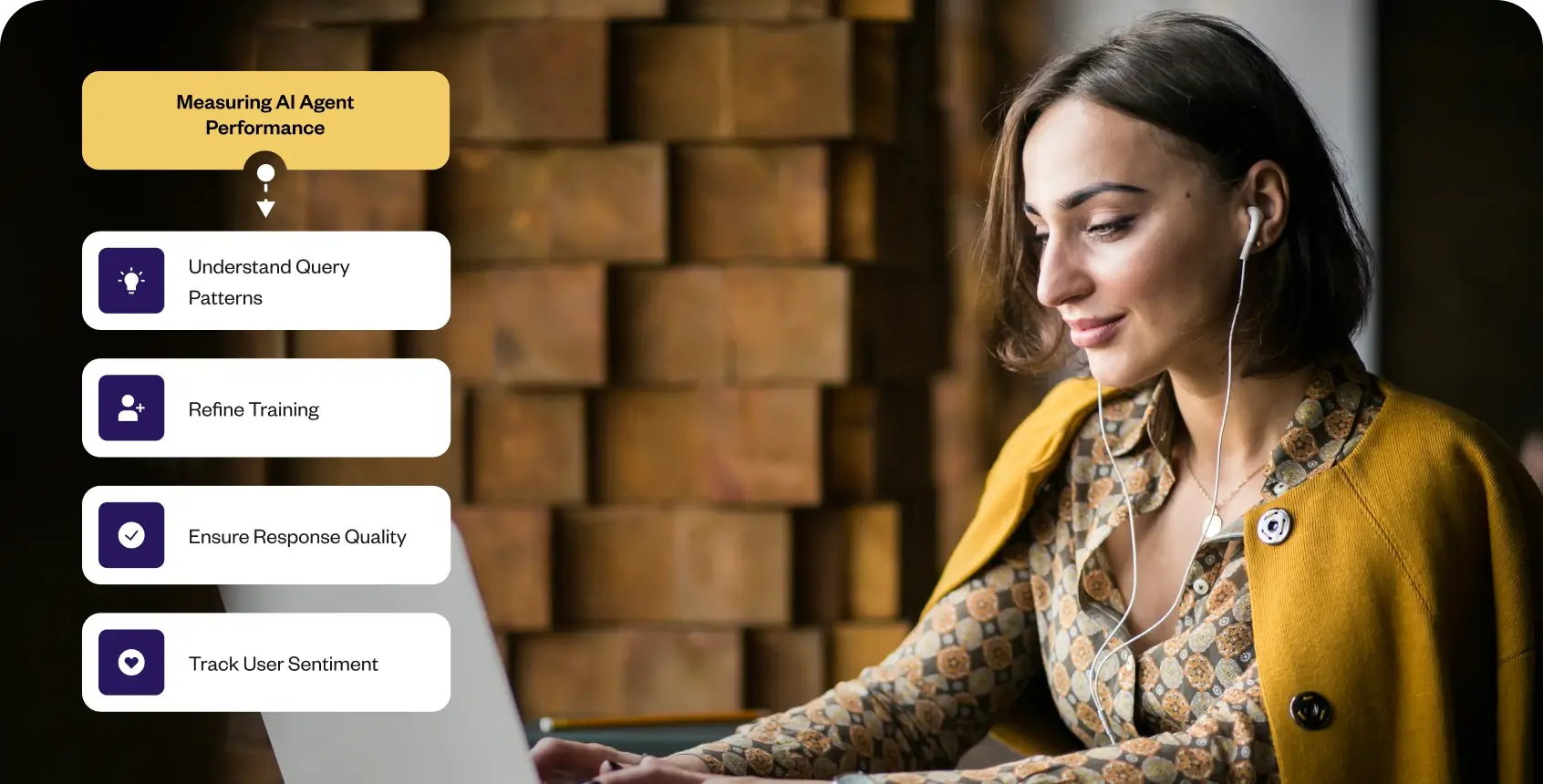

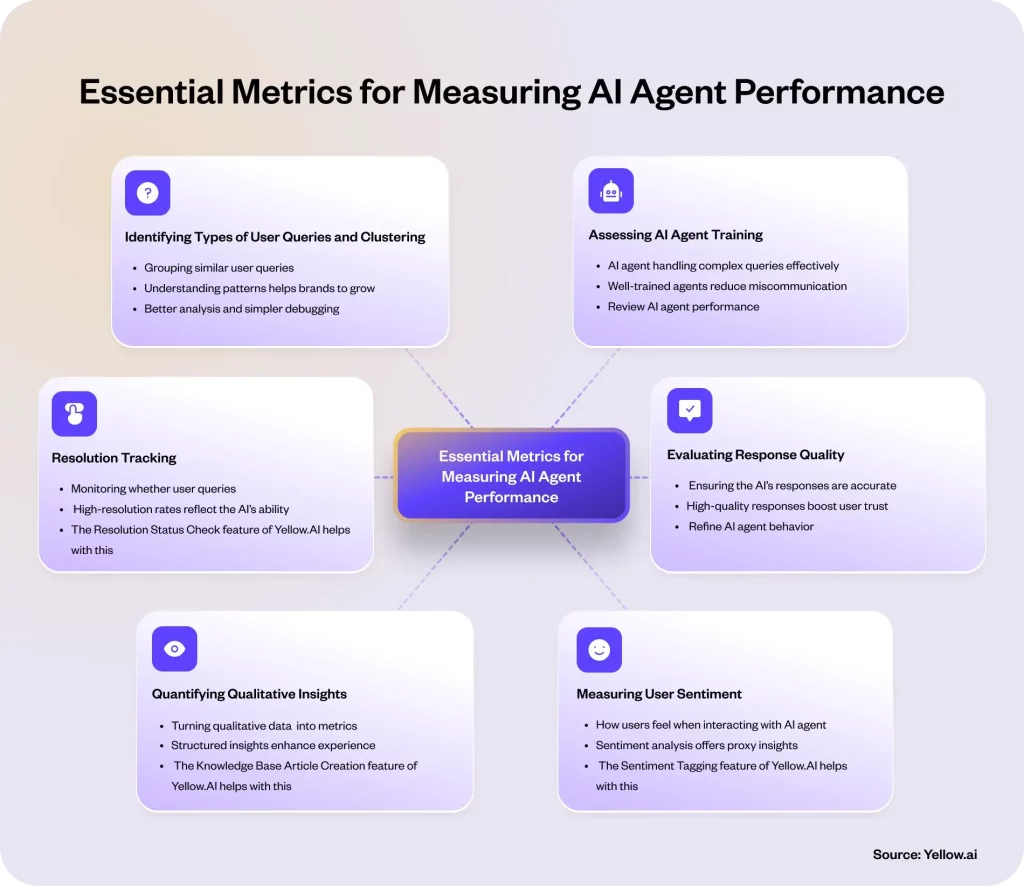

Essential Metrics for Measuring AI Agent Performance

- Identifying and Clustering User Query Types

  - What it is: Grouping similar user queries to uncover common issues and intents.

  - Why it matters: Understanding patterns helps brands focus on critical areas for improvement and optimize workflows.

  - How Yellow.ai helps: The Topic Clustering feature in the Analyze module organizes conversations by theme, enabling better analysis and simpler debugging.

- Evaluating Response Quality

  - What it is: Ensuring the AI’s responses are accurate, relevant, and actionable.

  - Why it matters: High-quality responses boost user trust and engagement.

  - How Yellow.ai helps: Conversation Logs provide detailed interaction histories that brands can use to review responses and refine the AI agent’s behavior based on real-world scenarios.

- Measuring User Sentiment

  - What it is: Understanding how users feel during their interaction with the AI agent (positive, neutral, or negative sentiment).

  - Why it matters: Sentiment acts as a proxy for user satisfaction, giving teams a signal even when users leave no explicit feedback.

  - How Yellow.ai helps: The Sentiment Tagging feature identifies user emotions, enabling teams to improve response strategies.

- Quantifying Qualitative Insights

  - What it is: Turning qualitative data, such as user feedback, into actionable metrics.

  - Why it matters: Structured insights help improve AI agent training and enhance the customer experience.

  - How Yellow.ai helps: The Knowledge Base Article Creation feature transforms resolved conversations into training data for automation, reducing manual intervention over time.

- Resolution Tracking

  - What it is: Monitoring whether user queries are resolved effectively by the AI agent.

  - Why it matters: High resolution rates reflect the AI’s ability to meet user needs autonomously.

  - How Yellow.ai helps: The Resolution Status Check feature helps measure the effectiveness of automation.
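To make a few of these metrics concrete, here is a minimal Python sketch that computes resolution rate and sentiment share per topic from exported conversation records. It assumes a hypothetical record with `topic`, `sentiment`, and `resolved` fields; these names are illustrative only and are not Yellow.ai’s export schema or API. Resolution rate is taken here as resolved conversations divided by total conversations.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass
class Conversation:
    """Hypothetical conversation record; field names are illustrative only."""
    topic: str        # topic/cluster label assigned to the conversation
    sentiment: str    # "positive", "neutral", or "negative"
    resolved: bool    # whether the AI agent resolved the query


def resolution_rate(convs):
    """Share of conversations resolved by the AI agent (0.0 if there are none)."""
    if not convs:
        return 0.0
    return sum(c.resolved for c in convs) / len(convs)


def sentiment_distribution(convs):
    """Fraction of conversations carrying each sentiment label."""
    total = len(convs)
    if total == 0:
        return {}
    counts = Counter(c.sentiment for c in convs)
    return {label: n / total for label, n in counts.items()}


def per_topic_report(convs):
    """Group conversations by topic; report volume, resolution rate, negative share."""
    by_topic = defaultdict(list)
    for c in convs:
        by_topic[c.topic].append(c)
    return {
        topic: {
            "volume": len(items),
            "resolution_rate": resolution_rate(items),
            "negative_share": sentiment_distribution(items).get("negative", 0.0),
        }
        for topic, items in by_topic.items()
    }


if __name__ == "__main__":
    # Made-up sample records for illustration only.
    sample = [
        Conversation("refunds", "negative", False),
        Conversation("refunds", "neutral", True),
        Conversation("order status", "positive", True),
        Conversation("order status", "neutral", True),
    ]
    print(f"Overall resolution rate: {resolution_rate(sample):.0%}")
    for topic, stats in per_topic_report(sample).items():
        print(topic, stats)
```

Keeping volume next to each rate makes it easy to tell a topic that fails often for many users apart from one that fails for only a handful.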

The Role of Yellow.ai’s Analyze Module

Yellow.ai’s Analyze module is specifically designed to address the challenges of measuring AI agent performance. Here’s how it empowers businesses:

- Chat Metrics: Gain insights into user interactions to identify what’s working and what needs improvement.

- Topic and Resolution Analysis: Drill down into specific topics to understand and refine resolution strategies.

- Automation Opportunities: Identify tasks that can be automated, reducing support workloads and enhancing efficiency.
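One simple way to shortlist automation opportunities, sketched here under assumptions, is to rank topics by how many conversations the AI agent left unresolved: high-volume topics with many unresolved conversations are where new automation would remove the most manual work. The function name, the `(total, resolved)` input shape, and the `min_volume` threshold are hypothetical and do not describe how the Analyze module actually scores opportunities.

```python
from typing import Dict, List, Tuple


def rank_automation_candidates(
    topic_stats: Dict[str, Tuple[int, int]],
    min_volume: int = 1,
) -> List[Tuple[str, int, float]]:
    """Rank topics by unresolved conversation count (hypothetical heuristic).

    topic_stats maps topic -> (total_conversations, resolved_conversations).
    Returns (topic, unresolved_count, resolution_rate) tuples, most unresolved first.
    Topics with fewer than min_volume conversations are skipped as too noisy.
    """
    ranked = []
    for topic, (total, resolved) in topic_stats.items():
        if total == 0 or total < min_volume:
            continue
        unresolved = total - resolved
        ranked.append((topic, unresolved, resolved / total))
    # Most unresolved first; ties broken by lower resolution rate, then topic name.
    ranked.sort(key=lambda row: (-row[1], row[2], row[0]))
    return ranked


if __name__ == "__main__":
    # Made-up counts for illustration only.
    stats = {
        "password reset": (500, 150),
        "order status": (800, 760),
        "refund requests": (300, 60),
        "store hours": (20, 5),
    }
    for topic, unresolved, rate in rank_automation_candidates(stats, min_volume=50):
        print(f"{topic}: {unresolved} unresolved ({rate:.0%} resolved)")
```

Ranking by absolute unresolved count rather than by resolution rate alone keeps small, noisy topics from crowding out the ones that generate most of the manual workload.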

By leveraging these tools, brands can ensure their AI agents continuously improve while delivering exceptional customer experiences.

Why It Matters

In an AI-first world, metrics like sentiment, response quality, and query clustering are no longer optional—they’re critical. These metrics allow businesses to:

- Enhance user satisfaction

- Build more robust AI models

- Reduce support costs through effective automation

With Yellow.ai, businesses have the tools they need to measure and optimize AI performance effectively. It’s not just about deploying AI agents—it’s about making them smarter, faster, and more impactful for users.