In the fast-paced world of technology, Large Language Models (LLMs) have taken center stage, capturing the attention of researchers, businesses, and tech enthusiasts alike. These colossal language models, with their remarkable capacity to understand, generate, and manipulate text on a massive scale, have piqued the interest of individuals and organizations eager to harness their capabilities. And we are seeing the industry buzzing with a slew of launches, from big tech companies to startups, all racing towards establishing themselves in this space.Yet, beneath the excitement surrounding LLMs lies the reality that comprehending these models can be quite challenging. They represent a complex blend of advanced technologies, data-driven insights, and intricate natural language processing.

In this guide, we demystify LLMs by delving into essential aspects like how they work, their diverse applications across industries, their advantages, limitations, and how to evaluate them effectively. So, let’s dive in and explore the world of LLMs, uncovering their potential and impact on the future of AI and communication.

What is a large language model (LLM)?

A Large Language Model is a language model known for its substantial scale, enabling the integration of billions of parameters to build intricate artificial neural networks.These networks harness the potential of advanced AI algorithms, employing deep learning methodologies and drawing insights from extensive datasets for the tasks of assessment, normalization, content generation, and precise prediction.

When compared to conventional language models, LLMs take on exceptionally large datasets, substantially augmenting the functionality and capabilities of an AI model. While the term “large” lacks a precise definition, it generally entails language models comprising no fewer than one billion parameters, each representing a machine learning variable.

Throughout history, spoken languages have evolved for communication, providing vocabulary, meaning, and structure. In AI, language models serve a similar role as the foundation for communication and idea generation. The lineage of LLMs traces back to early AI models like the ELIZA language model, which made its debut in 1966 at MIT in the United States.Much has changed since then.

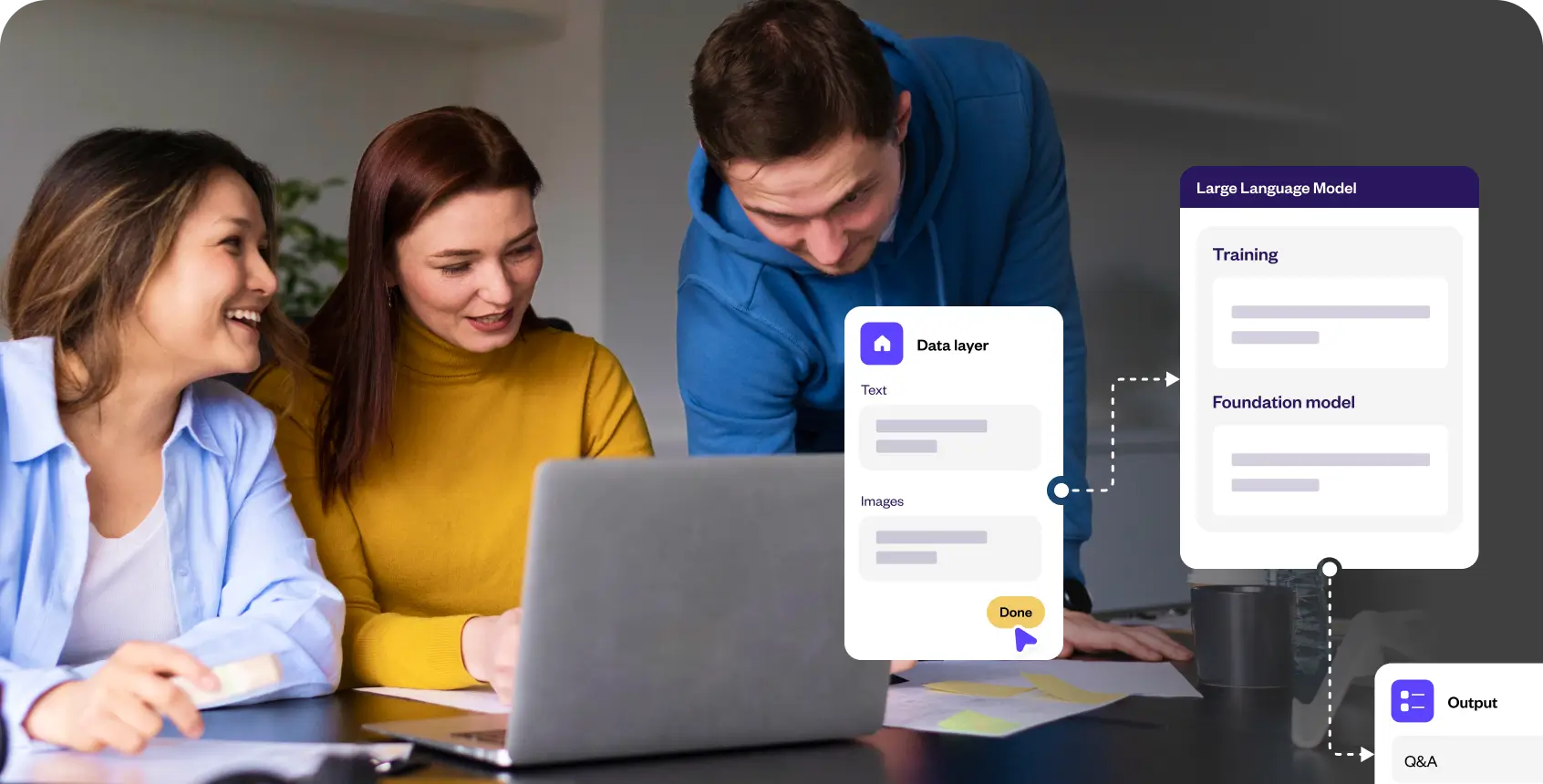

Modern-day LLMs commence their journey by undergoing initial training on a specific dataset and subsequently evolve through an array of training techniques, fostering internal relationships and enabling the generation of novel content. Language models serve as the backbone for Natural Language Processing (NLP) applications. They empower users to input queries in natural language, prompting the generation of coherent and relevant responses.

What is the difference between large language models and Generative AI?

LLMs and Generative AI both play significant roles in the realm of artificial intelligence, but they serve distinct purposes within the broader field. LLMs, like GPT-3, BERT, and RoBERTa, are specialized for the generation and comprehension of human language, making them a subset of Generative AI. Generative AI, on the other hand, encompasses a wide spectrum of models capable of creating diverse forms of content, spanning text, images, music, and more.

Having said that, LLMs are now multimodal, meaning that they can process and generate content in multiple modalities, such as text, images, and code. This is a significant advancement in LLM technology, as it allows LLMs to perform a wider range of tasks and interact with the world in a more comprehensive way. Multimodal LLMs such as GPT-4V and Kosmos-2.5, and PaLM-E are still undergoing major developments, but they have the potential to revolutionize the way we interact with computers.

Another way to think about the difference between generative AI and LLMs is that generative AI is a goal, while LLMs are a tool. Furthermore, it’s worth noting that while LLMs are a powerful tool for content generation, they are not the exclusive path to achieving generative AI. Different models, such as Generative Adversarial Networks (GANs) for images, Recurrent Neural Networks (RNNs) for music, and specialized neural architectures for code generation, exist to create content in their respective domains.

In essence, not all generative AI tools are built upon LLMs, but LLMs themselves constitute a form of generative AI.

Key components of large language models (LLM)

To comprehend the inner workings of an LLM, it’s essential to delve into its key components:

1. Transformers

LLMs are usually built upon the foundation of transformer-based architectures, which have revolutionized the field of NLP. These architectures enable the model to process input text in parallel, making them highly efficient for large-scale language tasks.

2. Training Data

The backbone of any LLM is the vast corpus of text data it’s trained on. This data comprises internet text, books, articles, and other textual sources, spanning multiple languages and domains.

3. Tokenization and Preprocessing

Text data is tokenized, segmented into discrete units such as words or subword pieces, and transformed into numerical embeddings that the model can work with. Tokenization is a critical step for understanding language context.

4. Attention Mechanisms

LLMs leverage attention mechanisms to assign varying levels of importance to different parts of a sentence or text. This allows them to capture contextual information effectively and understand the relationships between words.

5. Parameter Tuning

Fine-tuning the model’s hyperparameters, including the number of layers, hidden units, dropout rates, and learning rates, is a critical aspect of optimizing an LLM for specific tasks.

How do large language models (LLM) work?

The functioning of LLMs can be described through these fundamental steps:

- Input Encoding: LLMs receive a sequence of tokens (words or subword units) as input, which are converted into numerical embeddings using pre-trained embeddings.

- Contextual Understanding: The model utilizes multiple layers of neural networks, usually based on the transformer architecture, to decipher the contextual relationships between the tokens in the input sequence. Attention mechanisms within these layers help the model weigh the importance of different words, ensuring a deep understanding of context.

- Text Generation: Once it comprehends the input context, the LLM generates text by predicting the most probable next word or token based on the learned patterns. This process is iteratively repeated to produce coherent and contextually relevant text.

- Training: LLMs are trained on massive datasets, and during this process, their internal parameters are adjusted iteratively through backpropagation. The objective is to minimize the difference between the model’s predictions and the actual text data in the training set.

Simply put? Think of a LLM like a supercharged chef in a massive kitchen. This chef has an incredible number of recipe ingredients (parameters) and a super-smart recipe book (AI algorithms) that helps create all sorts of dishes. They’ve learned from cooking countless recipes (extensive datasets) and can quickly assess what ingredients to use, adjust flavors (assessment and normalization), whip up new recipes (content generation), and predict what dish you’ll love (precise prediction). LLMs are like culinary artists for generating text-based content.

Let’s take an example of a query on “I want to write an Instagram post caption on travel to Spain.” and deep dive into how the LLM works on this:

A Large Language Model (LLM) would begin by tokenizing the input sentence, breaking it down into individual units like “I,” “want,” “to,” “write,” “an,” “Instagram,” “post,” “caption,” “on,” “travel,” “to,” and “Spain.” It would then employ its deep learning architecture, often based on transformers, to comprehend the context and relationships between these tokens. In this specific query, the LLM would recognize the user’s intention to create an Instagram post caption about traveling to Spain, drawing upon its extensive training data consisting of diverse text corpora. Leveraging attention mechanisms, it would assign varying importance to different words, emphasizing “Instagram,” “post,” “caption,” and “Spain” as key components of the response. Subsequently, the model would generate a contextually relevant and coherent Instagram post caption that aligns with the user’s request, encapsulating the essence of a travel experience in Spain.

Use cases of large language models (LLM)

The versatility of LLMs has led to their adoption in various applications for both individuals and enterprises:

Coding:

LLMs are employed in coding tasks, where they assist developers by generating code snippets or providing explanations for programming concepts. For instance, an LLM might generate Python code for a specific task based on a natural language description provided by a developer.

Content generation:

They excel in creative writing and automated content creation. LLMs can produce human-like text for various purposes, from generating news articles to crafting marketing copy. For instance, a content generation tool might use an LLM to create engaging blog posts or product descriptions. Another capability of LLMs is content rewriting. They can rephrase or reword text while preserving the original meaning. This is useful for generating variations of content or improving readability.

Furthermore, multimodal LLMs can enable the generation of text content enriched with images. For instance, in an article about travel destinations, the model can automatically insert relevant images alongside textual descriptions. They can also enable the generation of text content enriched with images. Case in point, the model can automatically insert relevant images of travel worthy places alongside their textual descriptions.

Content summarization:

Also, LLMs excel in summarizing lengthy text content, extracting key information, and providing concise summaries. This is particularly valuable for quickly comprehending the main points of articles, research papers, or news reports. Additionally, this could be used to enable customer support agents with quick ticket summarizations, boosting their efficiency and improving customer experience.

Language translation:

LLMs have a pivotal role in machine translation. They can break down language barriers by providing more accurate and context-aware translations between languages. For example, a multilingual LLM can seamlessly translate a French document into English while preserving the original context and nuances.

Information retrieval:

LLMs are indispensable for information retrieval tasks. They can swiftly sift through extensive text corpora to retrieve relevant information, making them vital for search engines and recommendation systems. For instance, a search engine employs LLMs to understand user queries and retrieve the most relevant web pages from its index.

Sentiment analysis:

Businesses harness LLMs to gauge public sentiment on social media and in customer reviews. This facilitates market research and brand management by providing insights into customer opinions. For example, an LLM can analyze social media posts to determine whether they express positive or negative sentiments toward a product or service.

Conversational AI and chatbots:

LLMs empower conversational AI and chatbots to engage with users in a natural and human-like manner. These models can hold text-based conversations with users, answer questions, and provide assistance. For instance, a virtual assistant powered by an LLM can help users with tasks like setting reminders or finding information.

Related must read:

- Conversational AI – A complete guide for [2023]

- AI chatbot – The complete guide to chatbots

- Voice Bot – The complete guide to voice bots

- Generative AI – The Ultimate Guide [2023]

Classification and categorization:

LLMs are proficient in classifying and categorizing content based on predefined criteria. For instance, they can categorize news articles into topics like sports, politics, or entertainment, aiding in content organization and recommendation.

Image captioning:

Multimodal LLMs can generate descriptive captions for images, making them valuable for applications like content generation, accessibility, and image search. For instance, given an image of the Eiffel Tower, a multimodal LLM can generate a caption like, “A stunning view of the Eiffel Tower against a clear blue sky.”

Language-Image translation:

These models can translate text descriptions into images or vice versa. For example, if a user describes an outfit, a multimodal LLM can generate a corresponding image that captures the essence of the description.

Visual Question Answering (VQA):

Multimodal LLMs excel in answering questions about images. In a VQA scenario, when presented with an image of a cat and asked, “What animal is in the picture?” the model can respond with “cat.”

Product recommendation with visual cues:

In e-commerce, multimodal LLMs can recommend products by considering both textual product descriptions and images. If a user searches for “red sneakers,” the model can suggest red sneakers based on image recognition and textual information.

Automated visual content creation:

In graphic design and marketing, multimodal LLMs can automatically generate visual content, such as social media posts, advertisements, or infographics, based on textual input.

Benefits of large language models (LLM)

The benefits offered by LLMs encompass various aspects:

- Efficiency: LLMs automate tasks that involve the analysis of data, reducing the need for manual intervention and speeding up processes.

- Scalability: These models can be scaled to handle large volumes of data, making them adaptable to a wide range of applications.

- Performance: New-age LLMs are known for their exceptional performance, characterized by the capability to produce swift, low-latency responses.

- Customization flexibility: LLMs offer a robust foundation that can be tailored to meet specific use cases. Through additional training and fine-tuning, enterprises can customize these models to precisely align with their unique requirements and objectives.

- Multilingual support: LLMs can work with multiple languages, fostering global communication and information access.

- Improved user experience: They enhance user interactions with chatbots, virtual assistants, and search engines, providing more meaningful and context-aware responses.

Limitations and challenges of large language models (LLM)

While LLMs offer remarkable capabilities, they come with their own set of limitations and challenges:

- Bias amplification: LLMs can perpetuate biases present in the training data, leading to biased or discriminatory outputs.

- Ethical concerns and hallucinations: They can generate harmful, misleading, or inappropriate content, raising ethical and content moderation concerns.

- Interpretable outputs: Understanding why an LLM generates specific text can be challenging, making it difficult to ensure transparency and accountability.

- Data privacy: Handling sensitive data with LLMs necessitates robust privacy measures to protect user information and maintain confidentiality.

- Development and operational expenses: Implementing LLMs typically entails substantial investment in expensive graphics processing unit (GPU) hardware and extensive datasets to support the training process.

Beyond the initial development phase, the ongoing operational costs associated with running an LLM for an organization can be considerably high, encompassing maintenance, computational resources, and energy expenses.

- Glitch tokens: The use of maliciously designed prompts, referred to as glitch tokens, have the potential to disrupt the functionality of LLMs, highlighting the importance of robust security measures in LLM deployment.

Types of large language models (LLM)

Here’s a summary of four distinct types of large language models:

- Zero shot: Zero-shot models are standard LLMs trained on generic data to provide reasonably accurate results for general use cases. These models do not necessitate additional training and are ready for immediate use.

- Fine-tuned or domain-specific: Fine-tuned models go a step further by receiving additional training to enhance the effectiveness of the initial zero-shot model. An example is OpenAI Codex, which is frequently employed as an auto-completion programming tool for projects built on the foundation of GPT-3. These are also called specialized LLMs.

- Language representation: Language representation models leverage deep learning techniques and transformers, the architectural basis of generative AI. These models are well-suited for natural language processing tasks, enabling the conversion of languages into various mediums, such as written text.

- Multimodal: Multimodal LLMs possess the capability to handle both text and images, distinguishing them from their predecessors that were primarily designed for text generation. An example is GPT-4V, a more recent multimodal iteration of the model, capable of processing and generating content in multiple modalities.

Evaluation strategy for large language models

Having explored the inner workings of LLMs, their advantages, and drawbacks, the next step is to consider the evaluation process.

- Understand your use case: Start by clearly defining your purpose and the specific tasks you want the LLM to perform. Understand the nature of the content generation, language understanding, or data processing required.

- Inference speed and precision: Practicality is key when evaluating LLMs. Consider inference speed for large data sets; slow processing can hinder work. Choose models optimized for speed or handling big inputs, or prioritize precision for tasks like sentiment analysis, where accuracy is paramount and speed is secondary.

- Context length and model size: This is a vital step.While some models have input length limits, others can handle longer inputs for comprehensive processing. Model size impacts infrastructure needs, with smaller models suitable for standard hardware. Yet, larger models offer enhanced capabilities but require more computational resources.

- Review pre-trained models: Explore existing pre-trained LLMs like GPT-4, Claude, and others. Assess their capabilities, including language support, domain expertise, and multilingual capabilities, to see if they align with your needs.

- Fine-tuning options: Evaluate whether fine-tuning the LLM is necessary to tailor it to your specific tasks. Some LLMs offer fine-tuning options, allowing you to customize the model for your unique requirements.

- Testing and evaluation: Before making a final decision, conduct testing and experimentation with the LLM to evaluate its performance and suitability for your specific tasks. This may involve running pilot projects or conducting proof-of-concept trials. Such as assessing LLM outputs against labeled references allows for the calculation of accuracy metrics.

- Ethical and security considerations: Assess ethical and security implications, especially if the LLM will handle sensitive data or generate content that may have legal or ethical implications.

- Evaluate cost: Factor in the cost associated with using the LLM, including licensing fees, computational resources, model size, and any ongoing operational expenses. Utilize optimization methods such as quantization, hardware acceleration, or cloud services to enhance scalability and lower costs.

- Licensing and commercial use: Selecting the right LLM involves careful consideration of licensing terms. While some open models come with restrictions on commercial use, others permit commercial applications. It’s crucial to review licensing terms to ensure they align with your business requirements.

Examples of popular large language models (LLM)

1. GPT (Generative Pre-trained Transformer) models:

GPT-3, GPT-4, and their variants, developed by OpenAI, have gained popularity for their text generation capabilities and versatility in various language tasks. Now with GPT-4V, it is venturing out in the multimodal LLM space.

2. BERT (Bidirectional Encoder Representations from Transformers):

Developed by Google, BERT is renowned for its ability to understand context bidirectionally, making it a staple in NLP tasks.

3. Claude:

Developed by Anthropic, Claude is specifically designed to emphasize constitutional AI. This approach ensures that Claude’s AI outputs adhere to a defined set of principles, making the AI assistant it powers not only helpful but also safe and accurate.

4. LLaMA (Large Language Model Meta AI):

Meta’s 2023 LLM, boasts a massive 65 billion parameter version. Initially restricted to approved researchers and developers, it’s now open source, offering smaller, more accessible variants.

5. PaLM (Pathways Language Model):

Google’s PaLM is a massive 540 billion parameter transformer-based model that powers the AI chatbot Bard. PaLM specializes in reasoning tasks like coding, math, classification, and question answering. Several fine-tuned versions are available, including Med-Palm 2 for life sciences and Sec-Palm for accelerating threat analysis in cybersecurity deployments.

6. Orca:

A Microsoft creation with 13 billion parameters, is designed to run efficiently even on laptops. It enhances open source models by replicating the reasoning capabilities of LLMs, delivering GPT-4 performance with fewer parameters, and matching GPT-3.5 in various tasks. Orca is based on the 13 billion parameter version of LLaMA.

These Large Language Models have reshaped the landscape of natural language processing, enabling groundbreaking advances in communication, information retrieval, and artificial intelligence.

Having said that, for enterprise usage, generic LLMs, while impressive, often lack the depth and nuance needed for specialized domains, making them more susceptible to generating inaccurate or irrelevant content. This limitation is particularly evident in the form of hallucinations or misinterpretations of domain-specific information. In contrast, specialized or fine-tuned LLMs are tailored to possess in-depth knowledge of industry-specific terminology, enabling them to accurately understand and generate content related to particular concepts that may not be universally recognized or well-understood by generic language models.

Leveraging such specialized LLMs could give an edge to enterprises looking to use LLMs for very specific functions and use-cases based on their own data.

Conclusion

LLMs represent a transformative leap in artificial intelligence, fueled by their immense scale and deep learning capabilities. These models have their roots in the evolution of language models dating back to the early days of AI research. They serve as the backbone of NLP applications, revolutionizing communication and content generation.

While LLMs specialize in language-related tasks, they are now extending into multimodal domains, processing and generating content across text, images, and code. Their versatility has led to widespread adoption across various industries, from coding assistance to content generation, translation, and sentiment analysis. And this adoption is only expected to increase with specialized LLMs, new multimodal capabilities, and further advancement in this field.

While they are already showcasing significant impact when it comes to enterprise usage across multiple functions and use-cases, LLMs are not without challenges, including biases in training data, ethical concerns, and complex interpretability issues. Enterprises must carefully evaluate these models based on their specific use cases, considering factors like inference speed, model size, fine-tuning options, ethical implications, and cost. In doing so, they can harness the immense potential of LLMs to drive innovation and efficiency in the AI landscape, transforming the way we interact with technology and information.