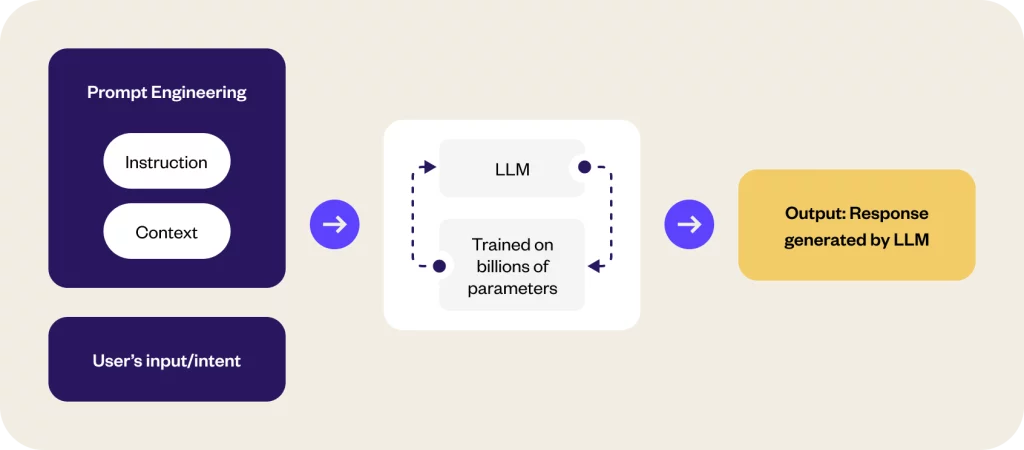

Large language models (LLMs) are deep learning models that can understand and generate text. They are trained on massive datasets to perform a variety of tasks, such as translation, summarization, and text generation. The basic idea is simple: a user inputs some text, and the LLM produces a response based on what it learned during training. However, one variable strongly shapes that response: the prompt.

Prompts are the specific input instructions or queries given to an LLM to generate a desired response. They can take various forms, such as plain text, questions, or commands. Prompt engineering is the process of optimizing this interaction with the AI model so that it generates high-quality, relevant, and coherent responses.

Why does prompt engineering matter?

Prompt engineering is a fundamental aspect of using AI models effectively, and it matters for several important reasons.

- LLMs are powerful tools, but they can be difficult to use effectively. Prompt engineering guides LLMs to be more specific and focused in their outputs by reducing ambiguity.

- Quality prompts lead to a better user experience across different use cases such as customer support, lead generation, customer feedback, etc.

- Well-designed prompts streamline interactions with AI, saving time and resources.

- By incorporating relevant information and preferences into prompts, we can personalize AI responses, making them more engaging, relevant, and useful.
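As an illustration of the last point, here is a minimal sketch of how customer details and preferences might be folded into a prompt. The template wording, the field names, and the `build_prompt` helper are assumptions made for this example; they are not part of any Yellow.ai product or API.

```python
from string import Template

# Hypothetical template: every field name below is an assumption for illustration.
PROMPT_TEMPLATE = Template(
    "You are a support assistant for $company.\n"
    "Customer name: $name\n"
    "Preferred language: $language\n"
    "Task: $task\n"
    "Answer in at most $max_sentences sentences."
)


def build_prompt(company, name, language, task, max_sentences=3):
    """Fill the template with customer-specific details to reduce ambiguity."""
    return PROMPT_TEMPLATE.substitute(
        company=company,
        name=name,
        language=language,
        task=task,
        max_sentences=max_sentences,
    )


prompt = build_prompt("Acme", "Asha", "Hindi", "Explain the refund policy")
print(prompt)
```

Stating the audience, the task, and the length limit explicitly leaves the model less room to guess, which is the ambiguity reduction described in the first point.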

Pitfalls of creating prompts manually

Prompt engineering is currently done by engineers and users who manually write prompts. However, this approach comes with several pitfalls:

Ineffective prompts: Ambiguities and vague language can confuse LLMs, leading to misinterpretation. Addressing bias is also crucial to prevent biased content.

Complex and time-consuming: Crafting meaningful, goal-driven prompts takes time, and anticipating every scenario in advance requires several rounds of iteration.

Limited experimentation: It is difficult to evaluate the quality of a prompt without actually running it through an LLM. This can lead to trial and error, which can be time-consuming and inefficient.

Adapting prompts by model: A prompt written for one LLM may not work as well for another. For example, a prompt tuned for GPT-3.5 may not produce the same results with GPT-4, which can lead to duplicated effort in rewriting the same prompt for different models.
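To make the trial and error described above more systematic, prompt variants can be scored against a small set of test cases. The sketch below is only an illustration: `stub_model` is a stand-in for a real LLM call, and the test case, the prompt wordings, and the substring-match scoring rule are all assumptions.

```python
from typing import Callable, Dict, List


def score_prompt(
    prompt_template: str,
    cases: List[Dict[str, str]],
    model: Callable[[str], str],
) -> float:
    """Return the fraction of cases whose response contains the expected phrase."""
    passed = 0
    for case in cases:
        prompt = prompt_template.format(question=case["question"])
        response = model(prompt)
        if case["expected"].lower() in response.lower():
            passed += 1
    return passed / len(cases)


def stub_model(prompt: str) -> str:
    """Stand-in for a real LLM call; swap in an actual model client here."""
    if "one sentence" in prompt:
        return "Refunds are processed within 5 days."
    return "Thank you for reaching out. Let me look into that."


# Hypothetical test case and prompt variants.
cases = [{"question": "How long do refunds take?", "expected": "5 days"}]
variants = {
    "vague": "Answer: {question}",
    "specific": "Answer in one sentence, citing the policy: {question}",
}

scores = {name: score_prompt(t, cases, stub_model) for name, t in variants.items()}
print(scores)
```

Because the model is passed in as a plain callable, the same cases can be rerun against a different model to check whether a prompt carries over.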

The solution: Introducing Yellow.ai Prompt Generator

To overcome these challenges, we have introduced an automated prompt generator. Yellow.ai Prompt Generator autonomously creates well-structured and effective prompts to achieve specific business goals. It streamlines and accelerates the process of generating LLM instructions, making it more efficient and accessible. Here is a comparison between crafting prompts manually and using the Prompt Generator.

| Parameters | Crafting prompts manually | With Yellow.ai Prompt Generator |
|---|---|---|
| Quality | Subpar prompts | High-quality prompts |
| Expertise | Requires a highly skilled prompt engineer to craft high-quality prompts | No need to learn prompt engineering |
| Time | 2–4 hours per manual prompt | Autonomously generates prompt in seconds |
| Use case coverage | Limited coverage: Requires multiple rounds of testing and evaluation to cover most of the use cases within the prompt | Maximum coverage: The “Improve Prompt” feature provides a list of use cases that have been missed and enhances the generated prompt without much testing. |

Let’s take a look at the demo to understand how it works.

Business benefits

Yellow.ai’s Prompt Generator empowers businesses with more efficient, accurate, and scalable conversations, achieving business goals and delivering enhanced value. Here are a few high-level benefits:

- Increased productivity: Businesses can improve operational efficiency by automating prompt creation for LLMs. This enables teams to focus on higher-value tasks.

- Time savings: The generator streamlines prompt creation, reducing the time and effort involved. This efficiency leads to faster decision-making and response times.

- Improved quality: Automatically generated prompts are consistently structured and aligned with the intended tasks. This consistency helps businesses improve the quality of the outputs generated by LLMs.

- Reduced costs: Businesses can generate more outputs from LLMs without having to hire more employees to create prompts, which reduces costs.

- Enhanced customer experience: Create more personalized and engaging conversations with customers. For example, businesses can create personalized product recommendations for customers, or suggest alternate actions tailored to the interests of specific customer segments.

- Customization: With our “Make prompts smarter” feature, businesses can tailor prompts to their specific domains, industries, or use cases.

Want to see the Yellow.ai prompt generator in action?