Recently, when DeepSeek, the Chinese AI lab backed by the hedge fund High-Flyer, unveiled its R1 model, reportedly trained for around $6 million, it marked a pivotal moment in the economics of AI. The implications go far beyond the attention-grabbing price tag. What we’re witnessing is a fundamental shift in how AI models will be developed, deployed, and evolved. The pace speaks for itself: while everyone was still digging into DeepSeek’s R1, OpenAI launched its latest model, o3-mini, less than two weeks later.

The LLM landscape is evolving at an unprecedented pace, and the rules are bending like never before. Release cycles that once stretched over years are now compressed into weeks, which means enterprises must be ready for capabilities that evolve just as quickly.

Working closely with enterprise leaders across industries, I hear one question again and again:

“How do we build an AI strategy that won’t be obsolete by the time we implement it?”

The answer is simple but critical: stop thinking about keeping up with the latest models, and start creating an architecture and approach that thrive in an environment of constant evolution.

Food for thought: Your Choice of LLM Vendor May Future-Proof Your Worst Nightmare

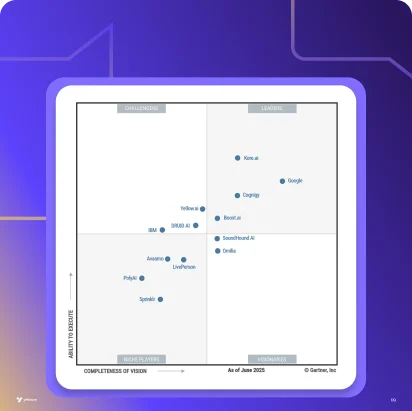

In the rush to implement AI, many enterprises are inadvertently building themselves into a corner. When taking a leap of faith with an emerging technology, going with the biggest names often seems safer. That reflex has served enterprises well in traditional software, but the AI landscape operates differently. The single-LLM-vendor approach that seemed efficient as recently as a year ago increasingly looks like a strategic liability. Here’s why your current vendor selection might be creating future challenges you’ll need to untangle:

Pitfall 1: The Cost of Single-Vendor Models

When you commit to a single LLM vendor, whether it’s OpenAI’s GPT-4, Anthropic’s Claude, or Google’s Gemini, the initial pricing might seem straightforward. However, as your usage scales and use cases multiply, costs can spiral unpredictably. More critically, you lose the leverage to adopt more cost-effective solutions when they emerge, which can leave you locked into paying premium prices for suboptimal solutions. DeepSeek’s latest model demonstrates how dramatically the economics are shifting, but only organizations with flexible architectures can take advantage of such developments.

Pitfall 2: The One-Size-Fits-All Trap

Most enterprises start with a handful of AI use cases, but as adoption grows, the need for specialized capabilities becomes apparent. Your customer service might need a model optimized for conversation, while your development team requires superior code generation. With a single vendor, you’re forced to use a one-size-fits-all solution for distinctly different needs. This becomes particularly problematic as specialized models emerge that could handle specific tasks more efficiently, often at a fraction of the cost. The real trap isn’t just pricing; it’s being unable to match the right model to the right task as your AI usage matures.

Pitfall 3: When “Good Enough” Becomes Your Ceiling

Today’s leading model might seem sufficient for your current needs, but AI is evolving at breakneck speed. We’ve moved from a world where software release cycles stretched over months or years to one where new, more capable models emerge almost weekly. Just look at recent weeks: DeepSeek’s release was quickly followed by new models from OpenAI, each pushing capabilities further. In this landscape, being locked into a single LLM vendor means your ceiling isn’t just their current capability; it’s their ability to keep pace with an increasingly crowded and innovative field.

Pitfall 4: Single Points of Failure in Enterprise AI

When your entire AI infrastructure depends on one LLM vendor, you’re betting not just on their technology but on their entire business trajectory. In enterprise environments, when systems fail, they fail at scale: affecting thousands of customers, disrupting critical operations, and creating cascading problems across departments. This vulnerability is particularly acute in areas like compliance and security. A single vendor’s policy change, compliance mishap, or security breach doesn’t just affect one system; it ripples through your entire AI infrastructure. Moreover, the cost of such failures compounds in enterprise scenarios. Beyond the immediate financial impact, there are damaged customer relationships, lost productivity across teams, and the resource-intensive process of implementing emergency solutions.

Pitfall 5: Lost Optionality in an Expanding AI Landscape

Perhaps the most significant hidden cost is opportunity cost. The AI market is expanding rapidly, with new models and capabilities emerging almost every week. Open-source alternatives are becoming increasingly sophisticated, and specialized models are proving superior for specific tasks. Open models also let you inspect, validate, and control the model itself, a level of transparency that becomes crucial as AI drives more critical business functions. When you’re architecturally committed to a single vendor, you’re not just missing today’s alternatives; you’re losing the ability to build trust through transparency and to adapt to tomorrow’s innovations.

5 Ways To Ensure Your AI Strategy Adapts To Evolving Technology

Now that we’ve identified the potential pitfalls, let’s focus on building an AI strategy that stays resilient and adaptable. Drawing on my experience working with enterprises across industries, here are five key approaches that can help you build a future-ready AI infrastructure:

1. Create a Flexible Foundation

The key to adaptability lies in your architecture. Build an abstraction layer that separates your applications from the underlying AI models. This means creating middleware that can interface with different LLMs, allowing you to switch models without disrupting your applications. Think of it as building a universal adapter – when a new, more efficient model emerges, you can plug it in without rewiring your entire system.
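
To make the universal-adapter idea concrete, here is a minimal Python sketch. It assumes a hypothetical `ChatModel` interface and two stub adapters (`VendorAAdapter`, `VendorBAdapter`) standing in for real vendor SDK wrappers; none of these names come from an actual library. Application code talks only to a `ModelGateway`, which maps a logical slot such as `"default"` to whichever adapter is currently registered.

```python
from dataclasses import dataclass
from typing import Protocol


class ChatModel(Protocol):
    """The only interface application code depends on (hypothetical)."""

    name: str

    def complete(self, prompt: str) -> str: ...


@dataclass
class VendorAAdapter:
    """Stub for one vendor; a real adapter would wrap that vendor's SDK."""

    name: str = "vendor-a"

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


@dataclass
class VendorBAdapter:
    """Stub for a second vendor with different behavior."""

    name: str = "vendor-b"

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt.upper()}"


class ModelGateway:
    """Middleware that maps logical slots to concrete model adapters."""

    def __init__(self) -> None:
        self._models: dict[str, ChatModel] = {}

    def register(self, slot: str, model: ChatModel) -> None:
        self._models[slot] = model

    def complete(self, slot: str, prompt: str) -> str:
        return self._models[slot].complete(prompt)


gateway = ModelGateway()
gateway.register("default", VendorAAdapter())
print(gateway.complete("default", "hello"))  # [vendor-a] hello

# Swapping vendors is a single registration change; callers are untouched.
gateway.register("default", VendorBAdapter())
print(gateway.complete("default", "hello"))  # [vendor-b] HELLO
```

Because callers name a slot rather than a vendor, plugging in a new model becomes a configuration change instead of a code change.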

2. The Right Tool for Each Job

Instead of forcing one model to handle everything, adopt a best-in-breed approach. Different tasks demand different strengths – GPT-4 might excel at creative writing, while Claude shines at analysis, and specialized models could be more cost-effective for specific tasks. By matching models to tasks, you not only optimize performance but also maintain cost efficiency.
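
In practice, a best-in-breed setup often comes down to a routing table. The Python sketch below assumes hypothetical task labels and placeholder model identifiers (they are not real product names), and it falls back to a general-purpose default for any task that has no dedicated route yet.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    model: str       # placeholder model identifier, not a real product name
    max_tokens: int  # illustrative per-task output budget


# Hypothetical mapping from task type to the model best suited for it.
ROUTES: dict[str, Route] = {
    "customer_chat": Route(model="conversational-model", max_tokens=512),
    "code_generation": Route(model="code-model", max_tokens=2048),
    "ticket_classification": Route(model="small-low-cost-model", max_tokens=16),
}

# Used when a task has no dedicated route yet.
DEFAULT_ROUTE = Route(model="general-purpose-model", max_tokens=1024)


def route_for(task: str) -> Route:
    """Return the route for a task, falling back to the general-purpose default."""
    return ROUTES.get(task, DEFAULT_ROUTE)


print(route_for("code_generation").model)  # code-model
print(route_for("legal_summary").model)    # general-purpose-model
```

Since routes are plain data, moving one task to a newer or cheaper model means editing a single entry.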

3. Own Your Data’s Journey

Your data is your competitive advantage. Invest in infrastructure that keeps you in control of your data’s movement between different AI models and systems. This means building strong data pipelines, maintaining clear governance, and ensuring your data remains portable without being locked into any single vendor’s format. Remember, the ability to move your data freely is key to staying agile.
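
One way to keep data portable is to record every model interaction in a schema you own rather than in any provider’s native request format. Here is a minimal Python sketch, assuming a hypothetical `Interaction` record and JSON Lines (one JSON object per line) as the storage format.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class Interaction:
    """One model call, stored in a schema you own (hypothetical fields)."""

    task: str
    model: str
    prompt: str
    response: str


def to_jsonl(records: list[Interaction]) -> str:
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(asdict(r), sort_keys=True) for r in records)


def from_jsonl(text: str) -> list[Interaction]:
    """Parse JSON Lines back into records, skipping blank lines."""
    return [Interaction(**json.loads(line)) for line in text.splitlines() if line.strip()]


log = [Interaction("customer_chat", "vendor-a-model", "Where is my order?", "It ships today.")]
exported = to_jsonl(log)

# The export round-trips without depending on any vendor-specific format.
assert from_jsonl(exported) == log
```

Because the `model` field is just a value in your own schema, the same history can later be replayed against, or used to evaluate, a different vendor’s model.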

4. Plan for the Unexpected

Resilience isn’t optional in enterprise AI deployments. The key is to select automation platforms with a modular, multi-LLM architecture that allows for seamless model switching and redundancy. These platform architectures should be flexible enough to integrate different models as needed – whether it’s for better performance, cost optimization, or as fallback options. This approach means when disruptions occur – whether technical, commercial, or compliance-related – you can quickly adapt without disrupting operations. Your AI infrastructure needs to be ready not just for the changes we can predict, but for the innovations and challenges we haven’t yet imagined.
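
Redundancy can be as simple as an ordered fallback chain: try the primary model, and if the call fails, move on to the next. The Python sketch below assumes each provider is wrapped as a plain function from prompt to text. `primary_down` and `secondary` are hypothetical stubs that simulate an outage; they are not real vendor clients.

```python
from typing import Callable

Provider = Callable[[str], str]


class AllProvidersFailed(RuntimeError):
    """Raised when every provider in the chain has failed."""


def complete_with_fallback(prompt: str, providers: list[tuple[str, Provider]]) -> tuple[str, str]:
    """Try providers in order; return (provider_name, response) from the first success."""
    errors: list[str] = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # a production system would catch only timeout/rate-limit errors
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))


def primary_down(prompt: str) -> str:
    """Stub simulating an unavailable primary vendor."""
    raise TimeoutError("primary vendor timed out")


def secondary(prompt: str) -> str:
    """Stub simulating a healthy backup vendor."""
    return f"answer to {prompt!r}"


used, answer = complete_with_fallback("status?", [("primary", primary_down), ("secondary", secondary)])
print(used, answer)  # secondary answer to 'status?'
```

Returning the name of the provider that answered makes it easy to log how often the fallback path is actually exercised.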

5. Monitor Market Evolution

Staying competitive means having a systematic approach to evaluating rapid developments in technology. Set clear benchmarks that matter for your business, whether it’s accuracy in customer service, speed in code generation, or cost per query in data analysis. When new models emerge, you should be able to quickly assess whether they could deliver better results for specific tasks. This isn’t a theoretical exercise; it’s about maintaining a competitive edge in a landscape where last month’s cutting edge can quickly become today’s baseline. Of course, you don’t have to go it alone: partnering with vendors who have a strong track record of integrating emerging models and maintaining a forward-looking roadmap can give you the same advantages while reducing the evaluation burden.
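
A lightweight evaluation harness makes this kind of assessment repeatable. The Python sketch below scores models on a small labeled test set and checks whether a candidate dominates the incumbent: at least as accurate, no more expensive per query, and strictly better on one of the two. The stub models, test cases, and per-query costs are hypothetical placeholders, not measured figures.

```python
from dataclasses import dataclass
from typing import Callable

Model = Callable[[str], str]


@dataclass
class EvalResult:
    model: str
    accuracy: float
    cost_per_query: float  # supplied by you; the values below are placeholders


def evaluate(name: str, model: Model, cases: list[tuple[str, str]], cost_per_query: float) -> EvalResult:
    """Case-insensitive exact-match accuracy over a labeled test set."""
    correct = sum(model(prompt).strip().lower() == expected.lower() for prompt, expected in cases)
    return EvalResult(name, correct / len(cases), cost_per_query)


def dominates(a: EvalResult, b: EvalResult) -> bool:
    """True if `a` is no worse than `b` on both metrics and strictly better on at least one."""
    no_worse = a.accuracy >= b.accuracy and a.cost_per_query <= b.cost_per_query
    strictly_better = a.accuracy > b.accuracy or a.cost_per_query < b.cost_per_query
    return no_worse and strictly_better


def stub(answers: dict[str, str]) -> Model:
    """Stand-in for a real model call: looks up a canned answer."""
    return lambda prompt: answers[prompt]


cases = [("2+2", "4"), ("capital of France", "paris"), ("3*3", "9")]
incumbent = evaluate("incumbent", stub({"2+2": "4", "capital of France": "Paris", "3*3": "6"}), cases, 0.002)
candidate = evaluate("candidate", stub({"2+2": "4", "capital of France": "Paris", "3*3": "9"}), cases, 0.001)
print(incumbent.accuracy, candidate.accuracy)  # 0.6666666666666666 1.0
print(dominates(candidate, incumbent))  # True
```

Dominance is a deliberately conservative switching rule; a team willing to trade cost for accuracy (or the reverse) would replace it with a weighted score tuned to its own priorities.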

Final Thoughts

The rapid evolution of LLMs, from DeepSeek R1 and OpenAI o3-mini to whatever comes next week, isn’t slowing down. The reality is clear: enterprises need architectures that can evolve as quickly as the models themselves. It’s no longer about picking the right model but about building systems flexible enough to leverage the best capabilities as they emerge. Success with AI won’t be determined by which model you choose today, but by how well your architecture adapts to the innovations of tomorrow.

Let’s Talk

The race to adapt to evolving AI capabilities isn’t slowing down. If you’re grappling with the challenges of building a flexible AI strategy for your enterprise, I’d be happy to discuss how we can help you navigate these waters.