“The winners in this business cycle won’t be the most technical,” Ben Edwards told me during our recent LinkedIn Live conversation. “They’re going to be the businesses that are the most adaptable. And maybe even more important, they’re the ones who are gonna be most honest about what is and isn’t working inside of their business.”

That insight cuts to the heart of a troubling paradox we’re witnessing across enterprise organizations today. Despite record investment in AI technologies, customer satisfaction scores continue to decline. Companies are deploying more sophisticated tools than ever before, yet they are somehow connecting with customers less than they did a year ago.

The culprit? What we call the “honesty gap”—the uncomfortable distance between what executives think is broken in their organization versus what’s actually causing friction for customers and employees every day.

This isn’t about chasing AI hype or jumping on the latest technological bandwagon. This is about using AI to fix what’s genuinely not working in your business today. And that requires a level of organizational self-awareness that many enterprises struggle to achieve.

Between the two of us, we’ve easily had over 4,000 conversations with enterprise leaders about their AI journeys this year alone. I (Vikas) come at this from the sales and strategy side, and Ben from the implementation and partner enablement side. What we’ve learned is that the organizations seeing real success aren’t the ones with the biggest AI budgets or the most sophisticated roadmaps. They’re the ones willing to look honestly at their operations and start with the truth about where they’re falling short.

What Thousands of Customer Conversations Taught Me About AI Readiness

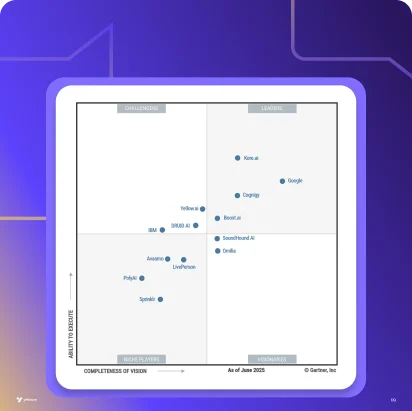

By Vikas Bhambri, SVP Sales at Yellow.ai

If you spend any time on LinkedIn these days, you’ve probably seen posts from executives announcing mandates like “I cannot hire another person in my department until I test out AI to solve the gap.” This top-down pressure is real, and it’s accelerating across every function—from CFOs and chief people officers to heads of customer experience and operations.

But here’s what I’ve learned from thousands of sales conversations: there’s a vast difference between organizations that say “we need AI” and those that can articulate “here’s our specific problem that AI might solve.”

1. The AI-Curious vs. AI-Ready Organizations

The AI-curious organizations approach conversations with excitement about possibilities. They ask questions like “What can AI do for us?” and “How quickly can we see transformation?” These conversations often involve large stakeholder groups trying to build comprehensive roadmaps that address every potential use case.

The AI-ready organizations, on the other hand, start conversations differently. They lead with specific pain points: “Our contact center agents are spending 90 seconds after every call typing notes instead of helping the next customer.” Or “We get crushed by appointment scheduling calls every Monday morning, and it’s taking our staff away from more critical patient interactions.”

This difference in starting point predicts success more than any other factor I’ve observed.

2. The AI Mandate and Reality Check

What’s particularly interesting is watching how the current mandate pressure plays out. Executives are being told to explore AI before expanding teams, but many discover that successful AI implementation isn’t as straightforward as they initially thought.

Even when a department head is “all in” on AI, they quickly realize they still need IT for data access and security, compliance for regulatory requirements, and often legal for vendor relationships. The desire to move fast runs into the reality of organizational complexity.

But here’s where the honest organizations separate themselves: instead of getting frustrated by these requirements, they view them as an opportunity to build the right foundation from the start.


3. Why the Starting Point Predicts Success

The prospects who move quickly from evaluation to implementation share several characteristics:

They can describe friction points in operational terms, not strategic abstractions. Instead of “we need to improve customer experience,” they say “our average handle time increased 23% last quarter because agents are struggling with our new knowledge base system.”

They understand that AI amplifies existing processes, good or bad. If your current customer service process is broken, AI will just break it faster and at greater scale. The honest organizations fix the underlying process issues first.

They’re realistic about timelines and success metrics. Rather than expecting immediate transformation, they’re looking for specific improvements in measurable areas within 8-12 weeks.

Perhaps most importantly, they view AI implementation as an iterative process rather than a one-time project. They understand that the goal isn’t to deploy perfect AI, but to deploy useful AI that gets better over time. And because AI can learn and improve from ongoing interactions, it offers a much better starting point for that kind of iteration than traditional automation does.

The Implementation Reality: Why Good Intentions Go Wrong

By Ben Edwards, Director of CX and AI at Sandler Partners

From the implementation trenches, I can tell you that the gap between AI enthusiasm and AI success often comes down to how organizations approach the early decisions—particularly around team size and scope.

1. The Committee Trap

The biggest implementation killer I see is what I call the committee trap. Organizations feel like they need to get “everyone on board” before moving forward, so they assemble steering committees with representatives from every business unit that might be impacted by AI.

Here’s the problem: AI is now mature enough, and implementation fast enough, that these large committees actually slow you down more than they help. While you’re trying to build consensus among 15 stakeholders with different priorities and concerns, your competitors are deploying solutions and building momentum.

I’m not saying stakeholder input isn’t important—it absolutely is. But there’s a difference between consultation and decision-making authority. The most successful implementations I see limit the core decision-making team to three types of people:

- someone who owns the challenge you’re trying to solve

- someone who can say yes to moving forward, and

- someone who knows about the data and systems you’ll need to access

Everyone else can be consulted and kept informed, but they don’t need to be in the decision-making process for every choice.


2. Searching for Use Cases in Strategy Documents

The organizations that succeed are the ones that start with their frontline folks. I always suggest talking to contact center leadership, agents, analysts, and operations people first. They know where the friction is—not the theoretical friction that shows up in strategy documents, but the practical, everyday friction that makes their jobs harder and customers more frustrated.

It’s remarkable how often a simple conversation reveals obvious starting points. I’ve sat with contact center leaders who, when asked “What’s the one inquiry that your agents get all the time that they just hate answering?” immediately identify three perfect use cases for AI automation.

3. Deploying AI That Makes Jobs Harder

One thing I’ve learned from failed AI implementations is that employee trust is absolutely critical. I’ve seen organizations deploy “helpful” AI tools that employees actively avoid because the tools consistently provide incorrect information or create more work instead of less.

The key to building trust is transparency about what AI can and cannot do, and ensuring that the first implementations genuinely make people’s jobs easier rather than more complicated.

Real-time agent assist is often more successful than post-call coaching because agents can see immediate value. When AI surfaces the right knowledge base article during a customer interaction, or suggests the appropriate coupon code to resolve a complaint, agents quickly understand how the technology helps them deliver better service.

Conclusion: It’s Crucial to Get Started Right

The next 6-18 months represent a critical window for enterprise AI adoption. The technology is mature enough to deliver value quickly, but it still requires thoughtful implementation and realistic expectations. The competitive advantage goes to organizations that move from evaluation to value creation faster than their peers.

AI isn’t coming for our jobs. But it is coming for bad processes, legacy tools, and anything that builds friction between you and your customers.

The organizations that are honest about these friction points and willing to address them systematically will have a significant advantage over those that wait for perfect plans or try to solve everything at once.

A Practical Framework

Based on our combined experience, here’s a practical framework for conducting your own organizational honesty audit:

- Friction Assessment: Have direct conversations with your frontline teams. Ask agents, analysts, and operations people where they struggle most. Don’t rely on management reports or strategic documents—talk to the people who experience customer interactions every day.

- Stakeholder Right-Sizing: Identify the three key people for your initial AI implementation: someone who owns the problem, someone who can authorize the solution, and someone who understands the data and systems. Everyone else can provide input, but they don’t need decision-making authority.

- Success Definition: Define what measurable impact looks like in operational terms. How will you know if AI is actually helping? Focus on metrics that matter for both customer experience and business efficiency, not just automation rates.

- Partnership Evaluation: Be honest about your internal expertise. Can you optimize large language models for your specific use cases? Do you have the security and compliance expertise for enterprise AI deployment? Partnership with the right providers isn’t outsourcing—it’s leveraging specialized knowledge to move faster and reduce risk.

Your Next Step

If you’re thinking about AI implementation, start by talking with your frontline team and reviewing past customer interactions to identify high-volume, repetitive friction points.

Define success in numbers—minutes saved per interaction, calls deflected per day, or resolution rate improvements.

Build momentum through early wins rather than perfect plans, because in our honest opinion, the organizations that prioritize honesty over hype, speed over perfection, and real problems over theoretical transformations will be the ones that turn AI investment into actual competitive advantage.

Got questions? Send them my way.