As AI agents become the primary interface between enterprises and customers, their reliability becomes crucial. Organizations need confidence that their AI will perform consistently across every interaction, not just in the scenarios they anticipated and planned for.

After helping customers deploy hundreds of enterprise AI agents this year, we’ve discovered that traditional testing methods – designed for predictable, deterministic software – simply aren’t built for AI’s probabilistic nature. A single change can ripple through thousands of conversational scenarios in ways manual testing will never catch. Today’s AI agents require testing infrastructure that can systematically validate behavior across thousands of potential conversation paths.

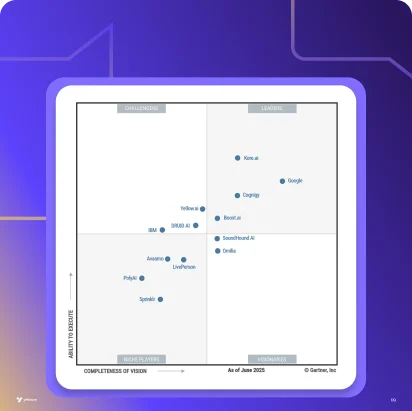

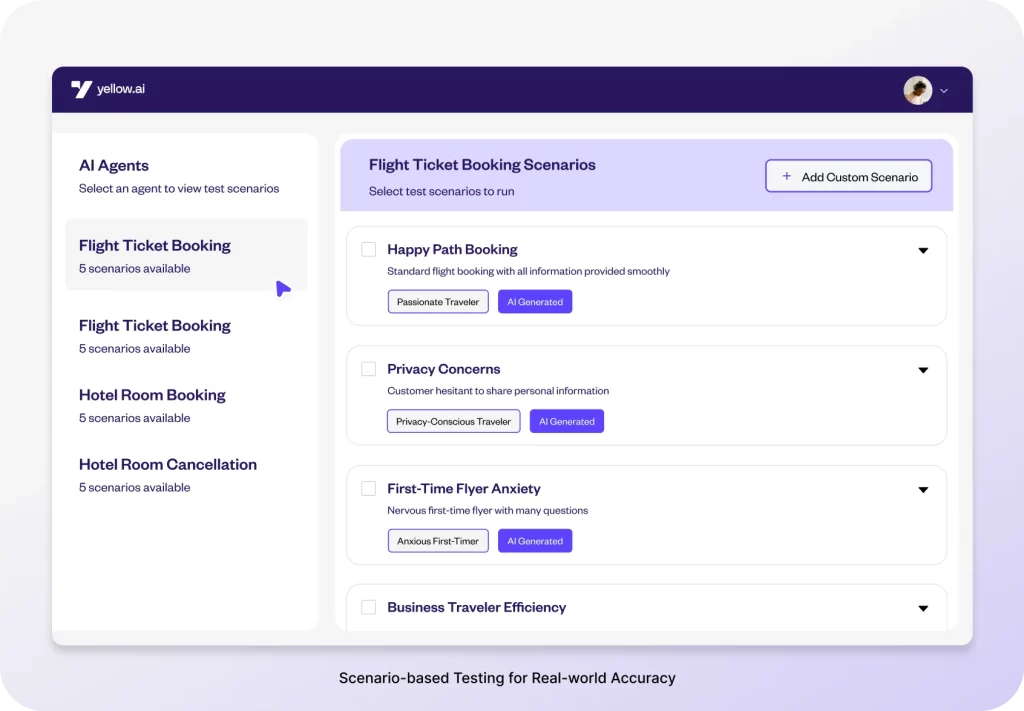

At Yellow.ai, we’ve built Agentic AI-powered Automated Testing specifically for this new reality, to ensure your AI agents perform reliably across every scenario your customers might create.

Why Manual Testing Falls Short for Modern AI Agents

Manual testing approaches break down when applied to AI agents for three fundamental reasons:

First, the SCENARIO EXPLOSION problem. A customer service AI agent needs to handle hundreds of intent types, across multiple personas, through various channels, in different languages. Each conversation can take dozens of turns with branching paths. The combinatorial complexity quickly becomes impossible to test manually.

Second, the CONSISTENCY challenge. AI agents don’t just need to give correct answers; they need to understand context and maintain a consistent tone, brand voice, and reasoning pattern across all interactions. Subtle variations that humans might miss in spot-checking can compound into significant brand inconsistencies at scale.

Third, the REGRESSION DETECTION gap. When you update an AI system, you’re not just changing specific functions; you’re potentially altering the agent’s reasoning patterns across every possible interaction. Manual testing typically focuses on the changed functionality while missing subtle degradations elsewhere.
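To put rough numbers on the scenario explosion described above, here is a back-of-the-envelope sketch. Every figure in it (200 intents, 5 personas, 4 channels, 10 languages, 3 branches per turn, 10 turns) is a hypothetical value chosen for illustration, not a measurement from any real deployment.

```python
from math import prod

# Hypothetical dimension sizes, chosen only for illustration.
dimensions = {
    "intents": 200,
    "personas": 5,
    "channels": 4,
    "languages": 10,
}

# Distinct starting configurations, before any conversation takes place.
starting_points = prod(dimensions.values())


def count_paths(branches_per_turn: int, turns: int) -> int:
    """Count conversation paths when every turn branches the same number of ways."""
    return branches_per_turn ** turns


# Assumed: 3 possible branches at each of 10 turns.
paths_per_start = count_paths(branches_per_turn=3, turns=10)
total_paths = starting_points * paths_per_start

print(f"starting configurations: {starting_points:,}")   # 40,000
print(f"paths per configuration: {paths_per_start:,}")   # 59,049
print(f"total conversation paths: {total_paths:,}")      # 2,361,960,000
```

Even with these modest assumptions the space runs into the billions, which is why exhaustive manual coverage is out of reach and sampling has to be automated.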

The obvious fix is to hire additional QA staff, create longer checklists, and run more scenarios by hand before each deployment. But…

It doesn’t scale. And more importantly, it doesn’t work: manual checks still sample only a tiny fraction of the possible conversation paths.

The Testing Infrastructure That AI Agents Actually Need

1. Conversation Flow Validation

Challenge: AI agents don’t just execute functions; they conduct conversations. This means testing individual responses isn’t enough. You need to validate entire conversation flows, ensuring agents maintain context, provide consistent information, and guide users toward resolution.

Solution: We’ve found that the most effective approach is scenario-based testing that simulates complete user journeys. Instead of testing isolated Q&A pairs, create test scenarios that mirror real customer intents from initiation through resolution. A billing inquiry test shouldn’t just verify that the agent can look up account information; it should simulate the full conversation flow, including clarifying questions, explaining charges, and offering next steps.
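As a minimal sketch of what a scenario-based test could look like, the example below plays a three-turn billing inquiry against a stub agent and checks each reply for expected phrases. The `Turn`, `Scenario`, `run_scenario`, and `stub_billing_agent` names are hypothetical, the stub stands in for a real agent, and simple phrase matching is assumed only to keep the example short; none of this reflects a specific product's API.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    user_message: str
    # Phrases the agent's reply must contain for this turn to pass.
    expected_phrases: list[str]


@dataclass
class Scenario:
    name: str
    turns: list[Turn]


def stub_billing_agent(history: list[tuple[str, str]], message: str) -> str:
    """Hypothetical stand-in for a real agent; the first reply depends on history."""
    text = message.lower().strip()
    if "bill" in text and not history:
        return "I can help with that. Which account number is this about?"
    if text.isdigit():
        return f"Account {text} has a $20 late fee from last month."
    if "why" in text:
        return ("The late fee applies because payment arrived after the due date. "
                "Would you like to set up autopay?")
    return "Could you rephrase that?"


def run_scenario(agent, scenario: Scenario) -> list[str]:
    """Play the scenario turn by turn and collect every unmet expectation."""
    history: list[tuple[str, str]] = []
    failures: list[str] = []
    for index, turn in enumerate(scenario.turns, start=1):
        reply = agent(history, turn.user_message)
        for phrase in turn.expected_phrases:
            if phrase.lower() not in reply.lower():
                failures.append(f"turn {index}: missing '{phrase}'")
        history.append((turn.user_message, reply))
    return failures


billing_inquiry = Scenario(
    name="billing inquiry with late fee",
    turns=[
        Turn("My bill looks too high", ["account number"]),        # clarifying question
        Turn("48213", ["late fee"]),                               # explains the charge
        Turn("Why was I charged that?", ["due date", "autopay"]),  # offers a next step
    ],
)

failures = run_scenario(stub_billing_agent, billing_inquiry)
print("PASS" if not failures else failures)
```

The point of the structure is that the history is carried from turn to turn, so a reply that is correct in isolation but loses context mid-conversation still fails the scenario.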

2. Multi-Dimensional Quality Assessment

Challenge: Traditional pass/fail testing doesn’t capture the nuanced requirements of AI interactions. An agent might provide technically correct information while using the wrong tone for a frustrated customer, or give appropriate responses that don’t align with brand voice guidelines.

Solution: Effective AI testing evaluates multiple dimensions simultaneously – accuracy, brand alignment, empathy, safety, and reasoning quality. For each conversation scenario, automated testing should assess whether the agent not only provided correct information, but did so in a way that matches your organization’s standards for customer interaction.
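One way to move beyond a single pass/fail flag is to score each dimension separately and apply a threshold per dimension. The sketch below assumes scores between 0.0 and 1.0 that come from some evaluator (rule checks, human review, or a model-based grader); the threshold values and the `Assessment` class are hypothetical, and the scores are hard-coded for illustration.

```python
from dataclasses import dataclass

# Hypothetical minimum acceptable score (0.0 to 1.0) for each dimension.
THRESHOLDS = {
    "accuracy": 0.9,
    "brand_alignment": 0.8,
    "empathy": 0.7,
    "safety": 1.0,
    "reasoning_quality": 0.8,
}


@dataclass
class Assessment:
    scores: dict[str, float]

    def failed_dimensions(self) -> list[str]:
        """Return every dimension whose score is missing or below its threshold."""
        return [
            name
            for name, minimum in THRESHOLDS.items()
            if self.scores.get(name, 0.0) < minimum
        ]

    @property
    def passed(self) -> bool:
        return not self.failed_dimensions()


# A reply that is factually right but tone-deaf toward a frustrated customer.
frustrated_customer_reply = Assessment(
    scores={
        "accuracy": 0.95,
        "brand_alignment": 0.85,
        "empathy": 0.40,
        "safety": 1.0,
        "reasoning_quality": 0.90,
    }
)

print(frustrated_customer_reply.passed)               # False
print(frustrated_customer_reply.failed_dimensions())  # ['empathy']
```

Reporting the failing dimensions, rather than one aggregate score, keeps a high accuracy number from masking a tone or safety problem.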

3. Scale and Regression Detection

Challenge: AI systems need continuous validation, not point-in-time testing. Every prompt adjustment, knowledge base update, or model version change requires comprehensive regression testing across all agent capabilities.

Solution: This is where automation becomes essential. Comprehensive test suites need to run automatically with every change, validating performance across numerous scenarios to catch degradations that manual testing would miss.
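A regression check of this kind can be sketched as a comparison between per-scenario scores from a baseline run and from the run after a change. The scenario names, scores, and the 0.05 tolerance below are hypothetical; in practice the scores would come from whatever evaluation the test suite produces.

```python
def find_regressions(
    baseline: dict[str, float],
    candidate: dict[str, float],
    tolerance: float = 0.05,
) -> dict[str, str]:
    """Return scenarios that dropped by more than `tolerance` or went missing."""
    regressions: dict[str, str] = {}
    for scenario, old_score in baseline.items():
        new_score = candidate.get(scenario)
        if new_score is None:
            regressions[scenario] = "missing from candidate run"
        elif old_score - new_score > tolerance:
            regressions[scenario] = f"{old_score:.2f} -> {new_score:.2f}"
    return regressions


# Hypothetical scores before and after a prompt change aimed at billing.
baseline = {"billing_inquiry": 0.92, "refund_request": 0.88, "password_reset": 0.95}
candidate = {"billing_inquiry": 0.93, "refund_request": 0.61, "password_reset": 0.94}

regressions = find_regressions(baseline, candidate)
for scenario, detail in regressions.items():
    print(f"REGRESSION {scenario}: {detail}")
```

In this made-up run the billing scenario improves slightly while the untouched refund scenario degrades, which is the kind of side effect that testing only the changed functionality would miss. A CI job could fail the build whenever the returned dictionary is non-empty.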

The Operational Impact of Automated Testing of AI Agents

We treat automated testing as a critical part of our platform because comprehensive AI testing infrastructure enables several operational improvements:

- Deployment confidence increases dramatically when you can validate agent performance across thousands of scenarios before going live. Instead of hoping the agent will perform well, teams will know exactly how it handles every critical business scenario.

- Development velocity accelerates because teams can iterate rapidly without fear of breaking existing functionality. Comprehensive regression testing provides the safety net that enables experimentation and optimization.

- Customer experience improves when every interaction meets validated standards for accuracy, brand voice, helpfulness and security. Customers receive reliable service regardless of when they interact with the agent or which specific conversation path they follow.

- Operational risk decreases significantly when issues are caught before reaching customers. The cost of fixing problems in development is a fraction of the cost of addressing customer-facing failures.

Building Trust Through Systematic Validation

A decade of working with enterprises at Yellow.ai has taught us that AI reliability isn’t about perfect models. It’s about predictable behavior. Organizations need to feel confident that their AI will perform consistently across channels and interactions, not just perform well occasionally. This trust can’t be built through hope or manual spot-checking; it requires systematic, continuous validation.

To that end, we’re launching our automated testing for general availability soon. It will include novel features like:

- Auto-generation of test cases – The AI intelligently extracts test scenarios from existing SOPs, knowledge bases, and customer interactions.

- Proactive analysis of issues before deployment – The AI autonomously flags risks, spotting edge cases and failure patterns across thousands of scenarios simultaneously.

- Simulation of real-world scenarios – The AI simulates end-to-end conversations that mirror actual customer behavior patterns, ensuring agents guide users effectively through complex multi-turn interactions.