We’ve been watching the enterprise AI space wrestle with a fundamental problem: everyone’s building smarter individual AI agents, but nobody’s figured out how to make them work together. Companies end up with a dozen different agents, one for HR, another for finance, maybe three more for different support functions, and users get bounced around like pinballs. It’s frustrating for employees, expensive to maintain, and frankly, it makes AI look bad while delivering neither efficiency nor ROI.

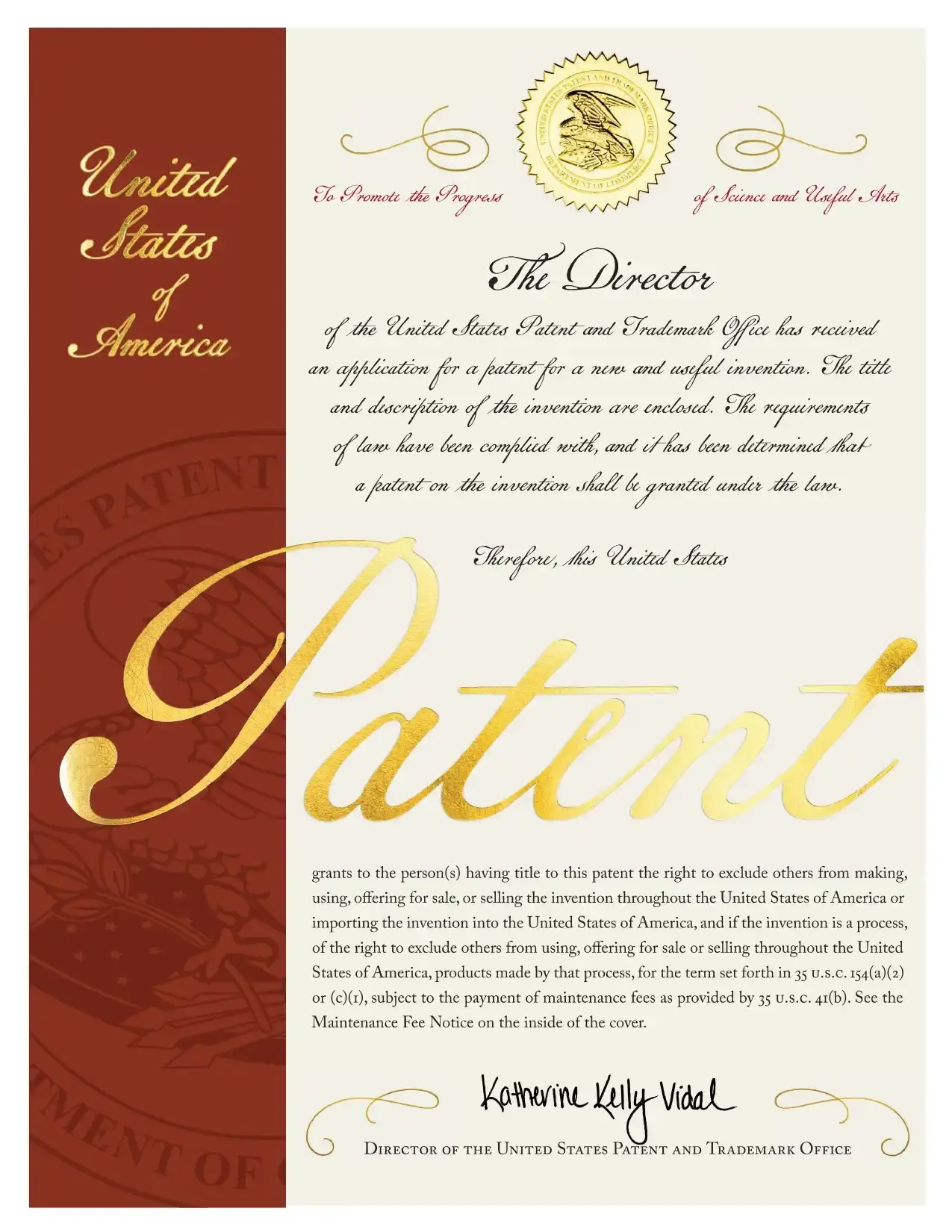

Three years ago, we saw this coming. While others were focused on making individual assistants more capable, we were building the orchestration layer that could coordinate multiple specialized agents. That vision is codified in U.S. Patent 11,909,698—our blueprint for enterprise-scale agentic AI that actually works.

The Problem: Numerous Bots, Fragmentation at Scale

Walk into any large enterprise today and you’ll find AI assistants scattered across departments. Sales has their lead qualification bot. HR rolled out something for leave requests. Finance built their own thing for expense approvals. Each team thought they were being innovative.

The user experience? Terrible. Ask about updating your address and you might get routed through three different systems. Need help with a purchase that affects your benefits? Good luck figuring out which bot handles what. From an IT perspective, it’s even worse. Every bot needs its own monitoring, its own compliance checks, its own maintenance. The governance overhead multiplies with each new assistant, and integration becomes a nightmare.

This isn’t just a UX problem but an architecture problem as well. And architectural problems need architectural solutions.

What We Built (And Patented)

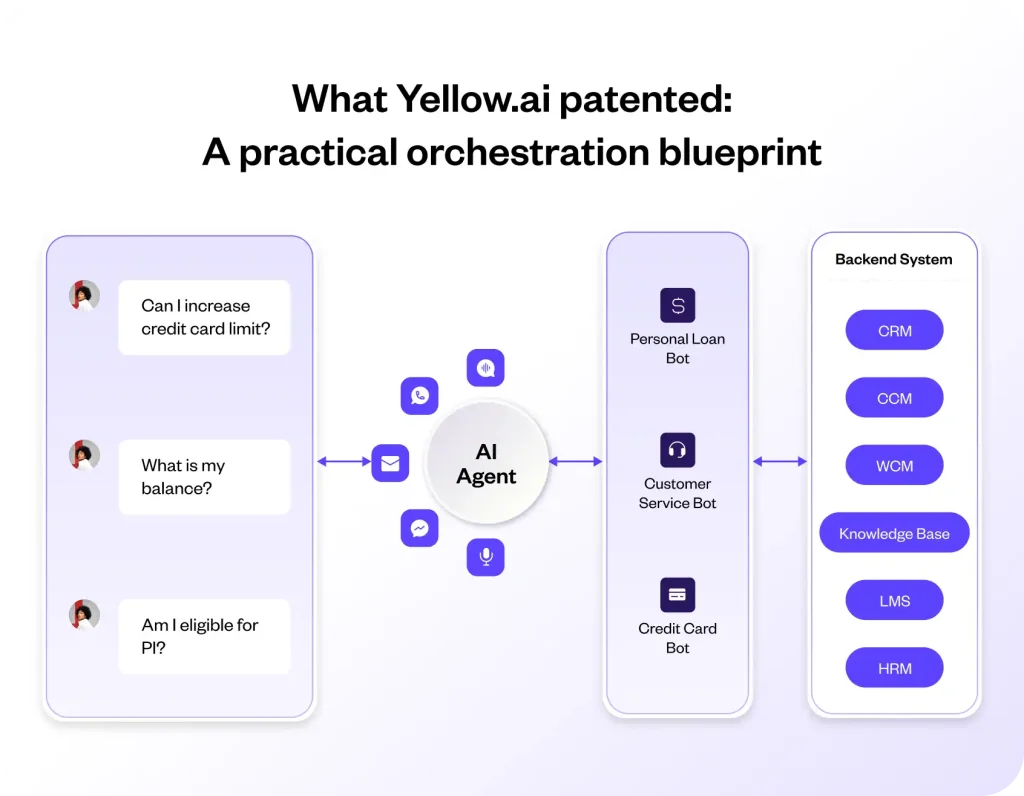

Our patent describes something deceptively simple: a single control plane that figures out which specialist agent should handle each query. But the devil’s in the details.

When a user asks a question, our system:

- Extracts intent and context: not just keywords, but what the user actually needs

- Scores confidence across all available specialist bots

- Routes immediately if there’s a clear winner, or polls multiple agents if things are ambiguous

- Presents the best response while learning from user choices to improve future routing

The user sees one seamless interface. Behind the scenes, we’re orchestrating a network of specialist AI agents.
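To make the routing steps above concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not the patented implementation: the `Agent` class, the keyword-overlap `score` function, and the `clear_winner` and `margin` thresholds are all hypothetical stand-ins for a real intent model and tuned values. The learning-from-user-choices step is left out for brevity.

```python
import re
from dataclasses import dataclass
from typing import Callable, FrozenSet, List, Tuple


@dataclass(frozen=True)
class Agent:
    """Hypothetical specialist agent; keywords stand in for a real intent model."""
    name: str
    keywords: FrozenSet[str]  # assumed non-empty
    answer: Callable[[str], str]


def score(agent: Agent, query: str) -> float:
    """Toy confidence: fraction of the agent's keywords found in the query."""
    words = set(re.findall(r"[a-z]+", query.lower()))
    return len(agent.keywords & words) / len(agent.keywords)


def route(
    query: str,
    agents: List[Agent],
    clear_winner: float = 0.6,  # assumed threshold, would be tuned by an admin
    margin: float = 0.2,        # assumed lead required over the runner-up
) -> Tuple[str, List[Tuple[str, str]]]:
    """Route directly on a clear winner, otherwise poll every plausible agent."""
    if not agents:
        return "fallback", []
    ranked = sorted(
        ((score(a, query), a) for a in agents),
        key=lambda pair: pair[0],
        reverse=True,
    )
    best_score, best = ranked[0]
    runner_up = ranked[1][0] if len(ranked) > 1 else 0.0
    if best_score >= clear_winner and best_score - runner_up >= margin:
        return "direct", [(best.name, best.answer(query))]
    # Ambiguous: collect answers from every agent with a non-zero score.
    candidates = [(a.name, a.answer(query)) for s, a in ranked if s > 0]
    return ("poll", candidates) if candidates else ("fallback", [])


HR = Agent("hr", frozenset({"leave", "vacation", "benefits"}), lambda q: "HR reply")
FINANCE = Agent("finance", frozenset({"expense", "invoice", "payroll"}), lambda q: "Finance reply")

if __name__ == "__main__":
    print(route("submit an expense invoice for payroll", [HR, FINANCE]))
    print(route("does my leave affect payroll", [HR, FINANCE]))
```

In this sketch, a query that clearly belongs to one agent is answered directly, while a query that touches both HR and finance is polled across both so the interface can present the candidate replies.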

What makes this enterprise-ready is the governance layer on top of these intelligent routing capabilities: one admin panel to manage thresholds, set routing rules, monitor performance, and ensure compliance across all your agents. This lets businesses scale their AI coverage without multiplying operational overhead and effort.
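As a rough illustration of what centralized governance could look like in code, the sketch below keeps per-agent thresholds and routing rules in one place and records every decision for later review. The names `AgentPolicy`, `ControlPlane`, `blocked_topics`, and `may_route` are hypothetical and chosen only for this example; they are not taken from the patent or any product API.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


@dataclass
class AgentPolicy:
    """Hypothetical per-agent settings an admin might manage centrally."""
    min_confidence: float = 0.6
    enabled: bool = True
    blocked_topics: Set[str] = field(default_factory=set)


@dataclass
class ControlPlane:
    """Single place that holds policies and an audit trail of routing decisions."""
    policies: Dict[str, AgentPolicy] = field(default_factory=dict)
    audit_log: List[Tuple[str, str, float, bool]] = field(default_factory=list)

    def may_route(self, agent: str, topic: str, confidence: float) -> bool:
        policy = self.policies.get(agent)
        allowed = (
            policy is not None            # unknown agents are never routed to
            and policy.enabled
            and topic not in policy.blocked_topics
            and confidence >= policy.min_confidence
        )
        # Every decision is logged, allowed or not, for monitoring and compliance.
        self.audit_log.append((agent, topic, confidence, allowed))
        return allowed


if __name__ == "__main__":
    plane = ControlPlane({"hr": AgentPolicy(min_confidence=0.5, blocked_topics={"salary"})})
    print(plane.may_route("hr", "leave", 0.7))
    print(plane.may_route("hr", "salary", 0.9))
    print(plane.audit_log)
```

The design choice worth noting is that adding a new agent means adding one policy entry, rather than standing up separate monitoring and compliance checks for it.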

The Crucial Evolution: From Traditional NLP to LLMs

When we first filed this patent, we were working with traditional NLP: lots of intent classification and rule-based routing. Functional, but brittle. Then LLMs arrived, and everything accelerated:

- Deeper contextual understanding. LLMs can hold longer conversations, grasp nuance and multi-turn context, and eliminate the brittleness that plagued classic slot-and-intent systems.

- Superior fallback and synthesis. Where our rule-based fallbacks used to return awkward or generic responses, LLMs can synthesize partial answers, summarize documents, and present consolidated replies drawn from multiple specialist bots.

- Seamless tool and retrieval integration. Modern LLMs work naturally with retrieval-augmented generation (RAG) and tool-calls to APIs, databases, and CRMs. This means our orchestrator can ask an LLM to gather facts, call external tools, and then route to the best specialist to complete the transaction.

- Stronger confidence signals. Embedding similarity and LLM-derived confidence scores make routing decisions far more reliable, reducing user friction and cutting down on those frustrating handoffs between bots.
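The embedding-similarity signal mentioned in the last bullet can be sketched with plain cosine similarity. This is a simplified illustration: the three-dimensional vectors in `AGENT_VECS` are made-up toy values, whereas a real system would obtain query and agent embeddings from an embedding model, and `rank_agents` is a hypothetical helper name.

```python
import math
from typing import Dict, List, Tuple


def cosine(u: List[float], v: List[float]) -> float:
    """Cosine similarity between two vectors; returns 0.0 if either has zero length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def rank_agents(
    query_vec: List[float], agent_vecs: Dict[str, List[float]]
) -> List[Tuple[str, float]]:
    """Rank agents by how close their description embedding is to the query embedding."""
    scored = ((name, cosine(query_vec, vec)) for name, vec in agent_vecs.items())
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Toy embeddings, assumed for illustration only.
AGENT_VECS = {
    "hr": [1.0, 0.0, 0.0],
    "finance": [0.0, 1.0, 0.0],
}

if __name__ == "__main__":
    # A query vector that leans strongly toward the HR direction.
    for name, similarity in rank_agents([0.9, 0.1, 0.0], AGENT_VECS):
        print(f"{name}: {similarity:.3f}")
```

The similarity scores can then feed a threshold check: a large gap between the top two agents suggests routing directly, while a small gap suggests polling several agents.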

But here’s what people miss: LLMs didn’t replace the need for orchestration; they made it more powerful. An LLM without orchestration is just a very capable individual assistant. Orchestration without modern LLMs is routing yesterday’s technology. Together, they unlock something enterprises actually need: coordinated intelligence that can handle real business complexity.

Why This Matters Now

The agentic AI wave is real, but most implementations will fail for the same reason early chatbot deployments failed: they’re solving individual use cases instead of building platforms.

We learned this lesson years ago. Every enterprise AI deployment eventually hits the coordination problem. You can build the world’s best HR assistant, but if it can’t hand off cleanly to your finance bot when someone asks about payroll taxes, you’ve created more friction, not less.

The companies that get this right will be the ones that build orchestration into their foundation, and they will consequently have sustainable competitive advantages. They’ll deploy new capabilities faster, maintain better user experiences, and actually get ROI from their AI investments.

The ones that don’t will end up with expensive, fragmented systems that confuse users and drain IT resources.

What’s Next

We’re already seeing the early signs of what orchestrated agentic AI can do. Multi-step workflows that span departments. Proactive outreach based on cross-system insights. Tool orchestration that actually works at enterprise scale.

The trick is to stop thinking about AI as individual assistants and start thinking about it as coordinated intelligence.

If you’re responsible for digital transformation, customer experience, or enterprise operations, the question isn’t whether you need orchestration. It’s whether you’re building it into your foundation now, or retrofitting it later when the coordination problem becomes impossible to ignore.

We built our orchestration layer years ago because we saw this problem coming. Now that vision is validated both in the market and in the patent system.

If you want to see what orchestrated agentic AI looks like in practice, let’s talk.